Drifting Into The Valley

At the I/O 2018 conference, Google shared a demo of Duplex, their artificial intelligence service (bot) "for accomplishing real-world tasks over the phone". The demonstration shows a bot booking an appointment at a hair salon and a reservation at a restaurant. The unsuspecting recipient converses with the bot as they negotiate times and details. This is an impressive advancement in conversational bots and clearly demonstrates the power of AI as a tool for task augmentation. It’s also a clear indicator that we’re entering a new phase in our relationship with this technology, one where the line between humans and AI becomes blurred.

While we’re still technically in the Narrow/Weak AI (context-constrained) phase of artificial intelligence, synthesized natural language is now nuanced and sophisticated enough to be almost indistinguishable from human speech. The ability of an AI to track multiple topics throughout a conversation also contributes to a more human-like capability. While chatbots have been around for more than 50 years, often creating momentary illusions of sentience, we’re now entering a new era of conversational bots that can pass as human over the phone.

After listening to Google's demo, I was immediately struck by the use of “um” as a conversational filler. I’m guessing the filler tested well because it gives a more human-like feeling, but “um” is annoying to me. It’s taken me years to cut “um” from my own speech, and now it seems it's being adopted as part of Google's bot lexicon. A few years ago I was researching “bot etiquette”, and I hadn’t anticipated we’d be perpetuating speech disfluency, but here we are. I'm sure this technology will quickly adapt to a diction appropriate for each “owner”.

I was also struck by the idea that the human recipient of the call was unaware that they were conversing with a bot. While I was working on AI-related projects at Microsoft, we looked at the effects of perceived deception from AI: what happens when your brain realizes it's been fooled. This is a primary principle in the Uncanny Valley hypothesis: the “feeling” that something perceived as real actually isn’t, which leads to a dip in the human observer's affinity for the humanoid or experience. In our research, the only way to reliably avoid a deception event was to be transparent, fully disclosing the hidden truth. Some may feel that requiring a bot to self-disclose at this point is overcautious, but the negative impact of a perceived deception should not be underestimated.

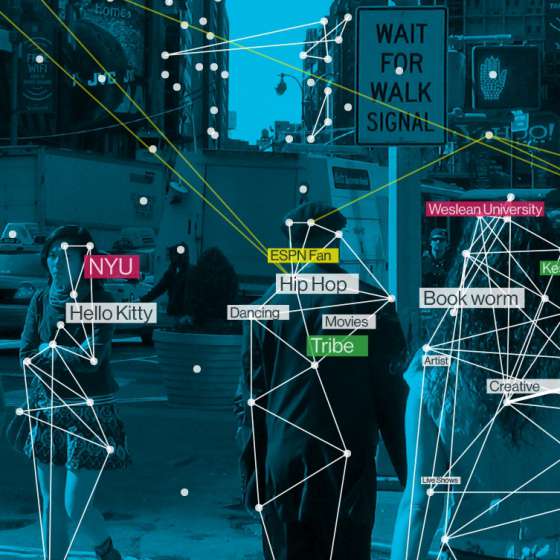

Extrapolating the use case into slightly more sensitive topics can also give us a sense of near-term moral and privacy dilemmas. For example, if the human were to tell the bot that they need a credit card to hold the reservation, the bot would need to understand how trustworthy the business is and what the user's intent is regarding privacy. The user’s home address, family details, Social Security number, work details, etc. could all be readily available to the bot, but deciding when and why to share these details requires a nuanced evaluation of each situation. When your bot answers a call from another bot, will it have the training to prevent a malicious phishing attack? There's also a good chance a bot would have better tools than most humans to recognize malicious behavior. Will we soon see an AI bot arms race, where we're damned if we adopt them and more so if we don't?

There’s an exciting side to all of this, and it’s where Google’s Duplex product is surely headed: the creation of our personal digital proxies, which will help us navigate the brave new AI-driven world. There’s even a chance these “AI proxy agents” will empower us to take back control of our data and leverage its true value. A well-trained digital agent could negotiate an exchange of personal data (data not even Google has access to) for deep discounts at a retailer, instantly negotiate great insurance rates, perhaps even negotiate a new job salary. How much will we entrust to these intelligent agents? And who will act in our best interests? Personally, I don’t have faith that Google will build my AI proxy agent with my best interests in mind; they have clearly shown their propensity for monetizing user data.

While we still live in the Wild West of AI, there’s a growing interest in avoiding major missteps that could result in widespread mistrust in AI and related fields. Last year, a group of leading AI and robotics researchers got together at the Asilomar Conference Center in California and wrote an initial set of principles to help guide new developments. It’s a good start, but in my opinion it misses some thinking around disclosure and transparency. For the next couple of years we’ll likely stumble our way along as we figure out how to cohabit with our new digital friends.

Posted May 10, 2018