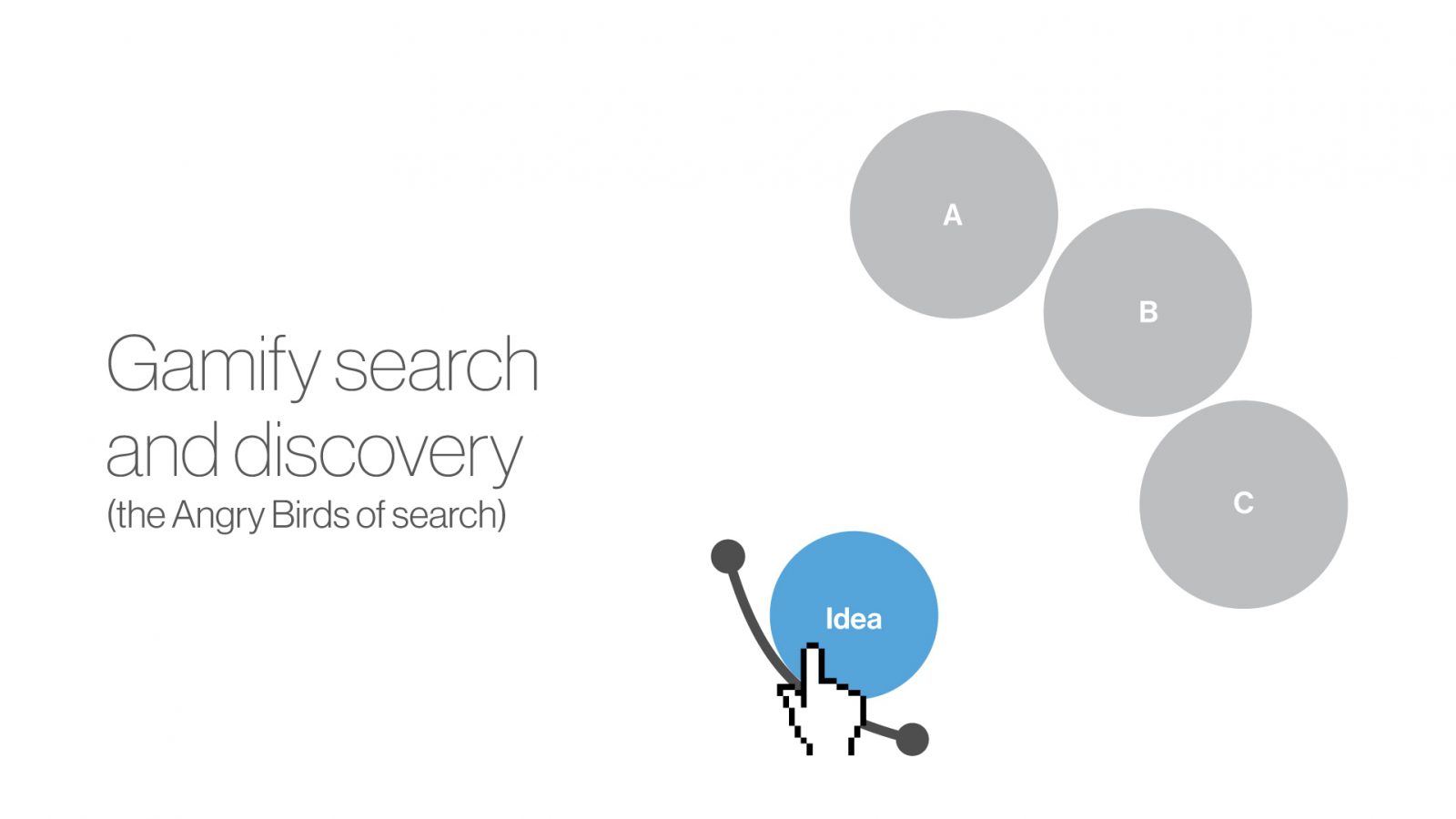

While at Microsoft, I worked on a number of exciting projects dealing with "Intelligent Agents" (personalized AI services). For this project, I was looking for a playful way to engage users in exploring and discovering unexpected connections between different topics and media. A company initiative at the time was to build Natural User Interface (NUI) experiences that would drive more interest and engagement on tablets, phones, and touch-enabled laptops.

Tangents

The project began as a study of aggregating all relevant data from across applications into a single representational space that could show relevance and relational associations. We called this project "Ego" and could see the power of breaking down the silos that fragment our computing experience. While the concept was exciting, it wasn't feasible, but it led to some very interesting conceptual ideas and, eventually, a patent.

Fluid Dynamics

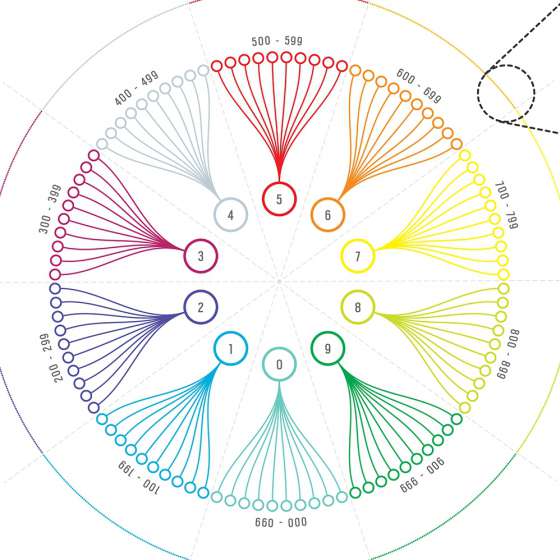

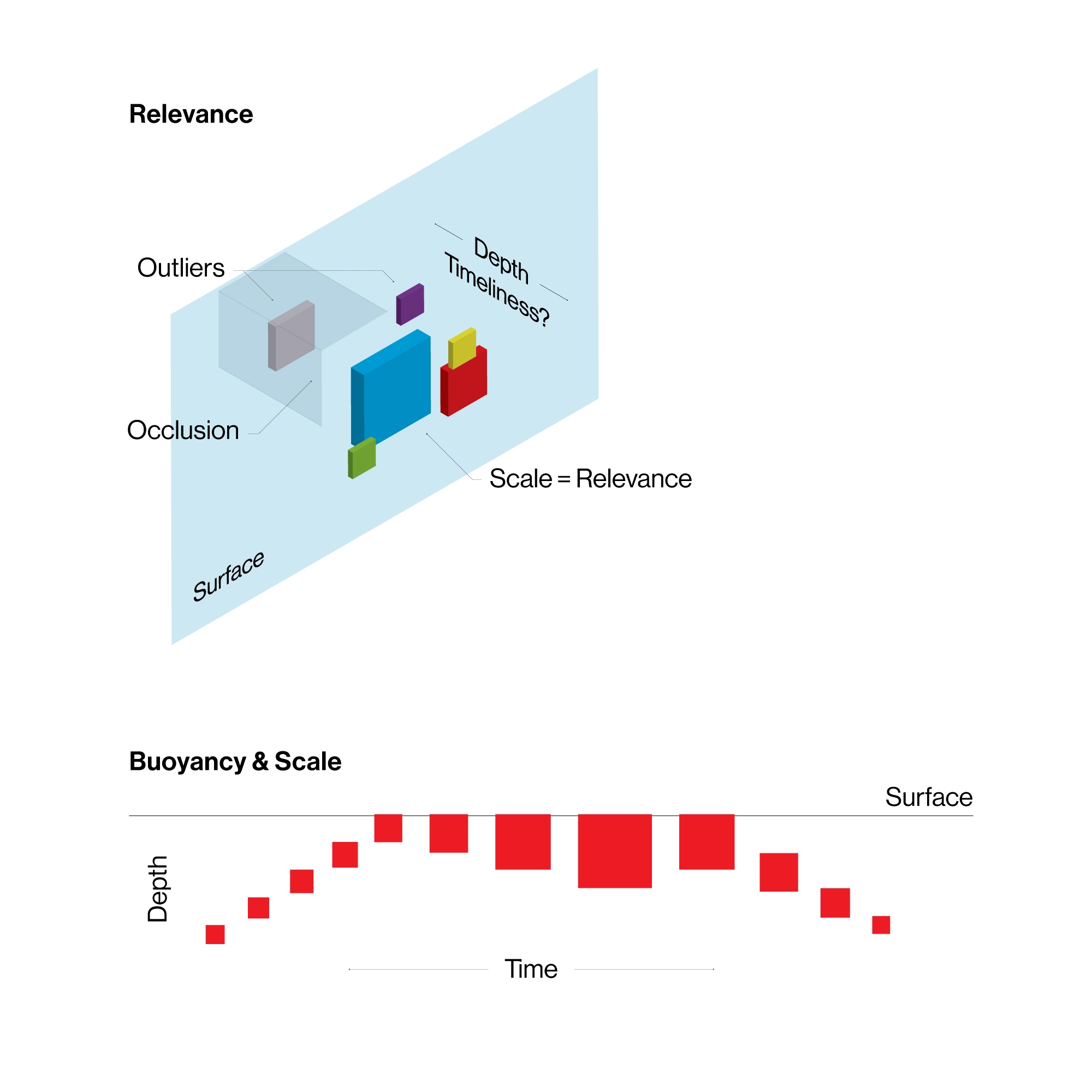

The primary mechanism driving the interface's action and reaction is a fluid-dynamics model. It gives the data a sense of physicality, using both scale and buoyancy to represent relevance.
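The model's actual equations aren't described here, so what follows is only a minimal sketch of how relevance could drive both scale and buoyancy, assuming a one-dimensional stratified "fluid" and relevance scores normalized to [0, 1]. `Tile`, `scale_for`, `step`, and all of the constants are hypothetical names and values chosen for illustration, not code from the Ego prototype.

```python
from dataclasses import dataclass

# Hypothetical tuning constants (not taken from the Ego prototype).
MIN_SCALE, MAX_SCALE = 0.5, 2.0  # rendered tile size range
GRAVITY = 20.0                   # strength of the buoyancy response
DRAG = 8.0                       # viscous damping so tiles settle smoothly


@dataclass
class Tile:
    name: str
    relevance: float       # assumed normalized to [0, 1]
    depth: float = 0.5     # 0 = surface (top of view), 1 = bottom
    velocity: float = 0.0  # positive = sinking


def scale_for(tile: Tile) -> float:
    """Map relevance linearly onto rendered tile size."""
    return MIN_SCALE + tile.relevance * (MAX_SCALE - MIN_SCALE)


def step(tiles: list[Tile], dt: float = 1 / 60) -> None:
    """Advance the simulation one frame with semi-implicit Euler integration.

    The "fluid" is stratified: its density rises linearly from 0 at the
    surface to 1 at the bottom. Each tile's density is 1 - relevance, so a
    tile sinks or rises until it reaches the depth where the fluid matches
    its own density, i.e. depth = 1 - relevance.
    """
    for t in tiles:
        fluid_density = t.depth
        tile_density = 1.0 - t.relevance
        # Net downward acceleration: (weight - buoyancy) minus viscous drag.
        accel = GRAVITY * (tile_density - fluid_density) - DRAG * t.velocity
        t.velocity += accel * dt
        # Keep tiles inside the visible column of fluid.
        t.depth = min(max(t.depth + t.velocity * dt, 0.0), 1.0)
```

Calling `step` once per frame on tiles with relevance 0.9 and 0.2 lets them settle near depths 0.1 and 0.8, while `scale_for` draws the first one much larger. Using a stratified fluid rather than simple floating gives every relevance level its own resting height, instead of pushing all relevant tiles against the surface; the drag term keeps them from bobbing indefinitely.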

Scaling, Composition and Decomposition

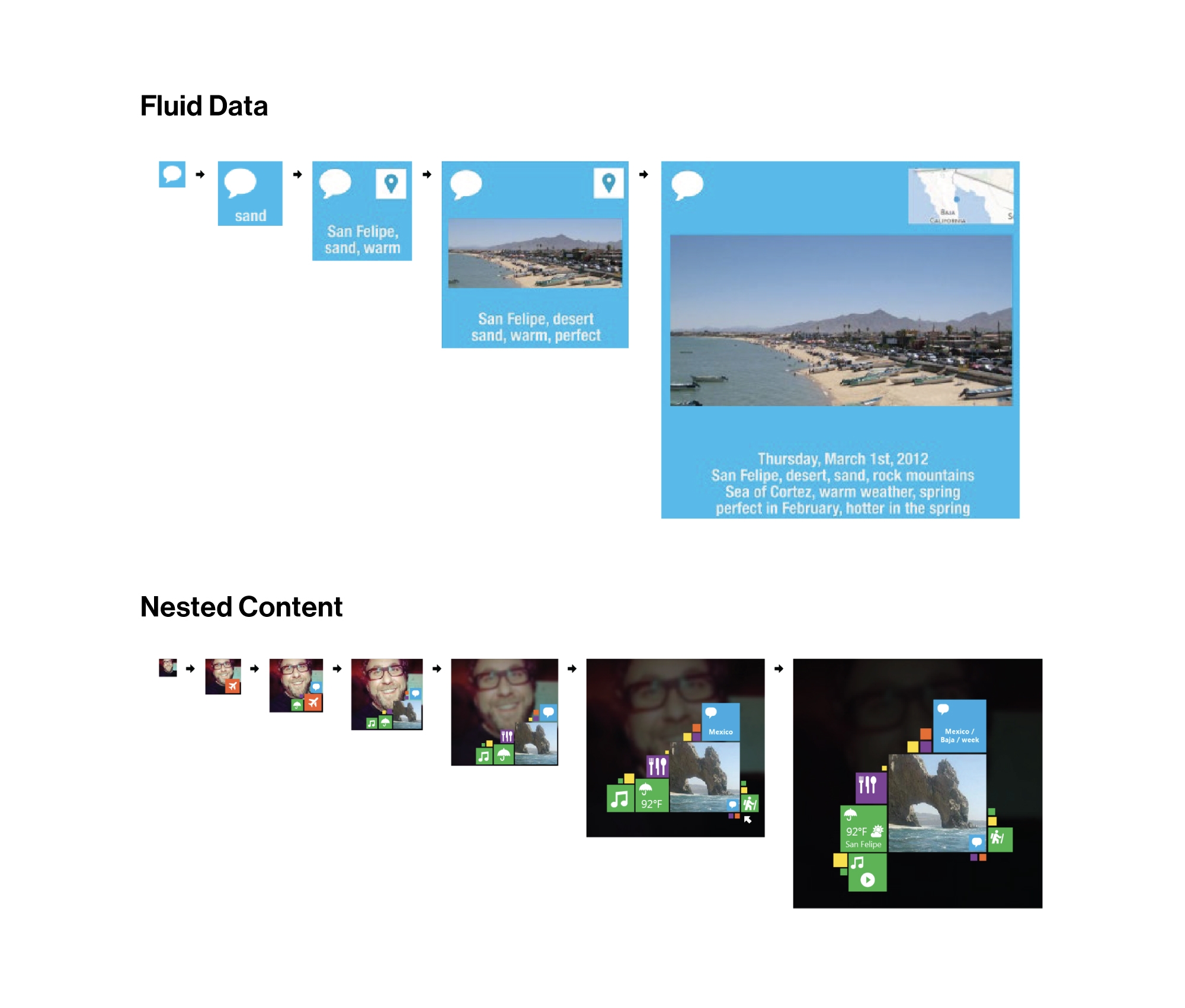

One of the core principles of the Ego interface is that a tile's scale reflects its relevance. This creates an opportunity to convey clear semantic meaning in every tile, regardless of its size. To do this while maintaining the proposed organic feel, we need a method for fluidly scaling the tiles' semantic language. The illustrations show fluid semantic zoom both for a single tile and for content nested within a tile.
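One way to approach fluid semantic zoom is to let each tile pick its representation from its current rendered size, and to let nested content repeat that choice inside the parent's area. This is an assumption-laden sketch: the level names, pixel thresholds, and the `TileContent`, `level_for`, and `render` helpers are all hypothetical, and a real interface would cross-fade between levels rather than switch abruptly.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds, in pixels along the tile's shorter side.
LEVELS = [
    (0, "icon"),       # a single glyph
    (64, "title"),     # title only
    (160, "summary"),  # title plus a one-line summary
    (320, "detail"),   # summary plus nested child tiles
]


@dataclass
class TileContent:
    title: str
    summary: str
    children: list["TileContent"] = field(default_factory=list)


def level_for(size_px: float) -> str:
    """Return the richest level whose threshold the tile size meets."""
    chosen = LEVELS[0][1]
    for threshold, name in LEVELS:
        if size_px >= threshold:
            chosen = name
    return chosen


def render(content: TileContent, size_px: float, indent: int = 0) -> list[str]:
    """Describe what a tile shows at a given size, recursing into children."""
    level = level_for(size_px)
    pad = "  " * indent
    if level == "icon":
        lines = [f"{pad}[{content.title[:1]}]"]
    elif level == "title":
        lines = [f"{pad}{content.title}"]
    else:
        lines = [f"{pad}{content.title}: {content.summary}"]
    if level == "detail" and content.children:
        # Children share the parent's area, so each gets a smaller size
        # and independently picks its own level of detail.
        child_size = size_px / len(content.children)
        for child in content.children:
            lines += render(child, child_size, indent + 1)
    return lines
```

At 400 px, a tile with two children shows its own summary plus both children at summary level; at 100 px the same tile collapses to its title, and at 30 px to a single glyph.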

Spatial Representation and Experience Boundaries

The experience either lives in a walled box, where the user can see the complete world in a single view (a "God view"), or it extends beyond the edges of the screen. In the walled box, content generally has to displace other content to gain visibility. A hybrid is also possible: it keeps the single view but momentarily zooms in on content. If the experience extends beyond the edges, it will need to represent wayfinding and spatial navigation.

Engaging and Disengaging

The system is living and dynamic, reflecting the aggregate activity surrounding the user. Ideally, the experience presents this data in a way that intuitively and clearly reflects relevance and meaning. But what happens when the user influences the presentation? On one hand, a user's interest in a piece of data should change its relationships and possibly make it more relevant; on the other, altering the natural state of those relationships seems problematic. Can we drag an object? Rub it? Tap on it repeatedly? If the goal is to influence relevance, is there a way to do so that won't be confused with simply looking at an object to better understand what it is?

Beyond simply expressing interest, the act of engaging (consumption, clarification, correction, etc.) needs to be an intuitive, primary interaction model. If touching an object fully engages it, which would be the most intuitive approach, then secondary forms of engagement become difficult. Zooming (pinch-to-zoom) seems the obvious secondary choice for engaging with content, but targeting specific items within an organic UI can be challenging.

Mapping Analog

The ability to scale and navigate an information “landscape” can be seen as analogous to map-based interaction. Some of the opportunities and challenges this presents include:

- Maps and data can be interchangeable/merged

- Wayfinding becomes critical in understanding context

3rd Party Content Modeling

One danger of content aggregation is the expectation of experience parity with the third-party services. For example, if we aggregate Facebook, to what extent do we display its content or let the user interact with the service? Replicating services can become a large development effort, both up front and ongoing. We recommend setting parity expectations low, since that leaves more up-front development effort for the interaction and intelligence components.

On to Tangents

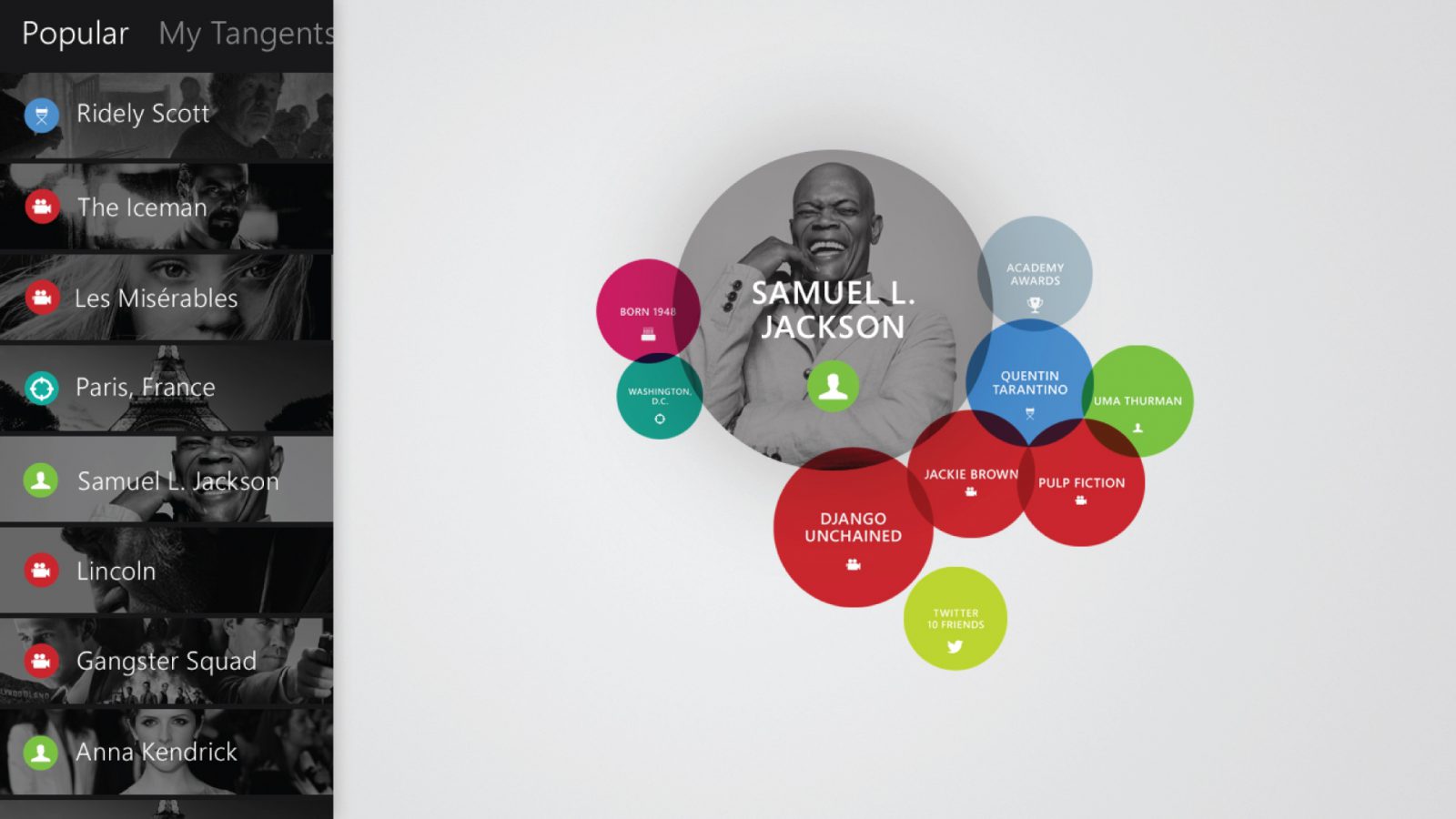

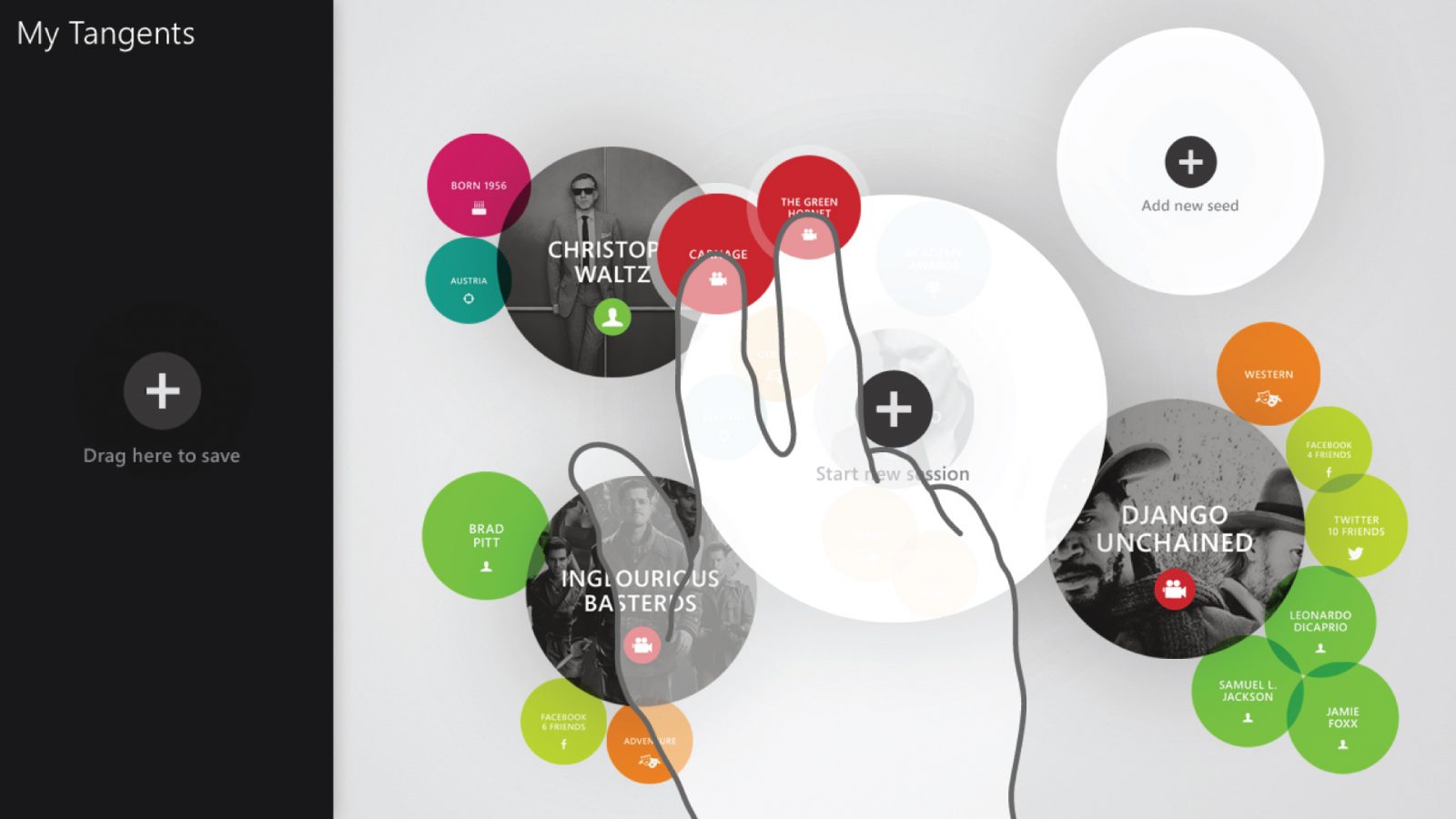

When we realized that the vision (essentially a replacement for, or augmentation of, the Windows desktop) would be impossible for our team to prototype, we took the most compelling ideas and applied them to a subject I love: discovery. We started with movies and asked what we could learn if we aggregated all known data into a fluid experience and surfaced the data points with the highest relevance. Then, by looking at multiple movies or actors together, we could see where their relevance intersected.
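A simple way to model "where relevance intersects" is to treat each selected movie or actor as a map from related data points to relevance scores and keep only the points shared by all of them. This sketch assumes scores in [0, 1] and combines them with `min` (a fuzzy AND); the function name, data shape, and scoring rule are illustrative assumptions, not the Tangents implementation.

```python
def intersect_relevance(
    profiles: list[dict[str, float]], top_n: int = 5
) -> list[tuple[str, float]]:
    """Rank data points that are relevant to *every* selected item.

    Each profile maps a data point (e.g. an actor, location, or car) to a
    relevance score in [0, 1]. A point's combined score is its minimum
    score across profiles, so it ranks highly only if it matters to all
    of the selected items.
    """
    if not profiles:
        return []
    # Keys present in every profile.
    shared = set(profiles[0]).intersection(*profiles[1:])
    scored = [(key, min(p[key] for p in profiles)) for key in shared]
    # Highest combined relevance first; ties broken alphabetically for stability.
    scored.sort(key=lambda kv: (-kv[1], kv[0]))
    return scored[:top_n]
```

For example, with `{"actor:A": 0.9, "city:LA": 0.6}` and `{"actor:A": 0.7, "city:LA": 0.8, "composer:C": 0.5}`, the result is `[("actor:A", 0.7), ("city:LA", 0.6)]`. Taking the minimum is deliberately strict; a product or geometric mean would be a softer alternative that still rewards overlap.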

The result was a playful and addictive experience that surfaced delightful and unexpected insights. We drew on some interesting data sources, including Microsoft's Knowledge Graph, Watchwith (a startup logging frame-by-frame contextual data on movies), Wikipedia, IMDB, IMCDB (Internet Movie Car Database), IMFDB (Internet Movie Firearms Database), and many others. We unified all the data on the IMDB ID, since every source referenced IMDB.
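Because every source referenced IMDB, the IMDB ID can serve as a join key. Here is a minimal sketch of that unification step, assuming each source has already been fetched into a list of records carrying an `"imdb_id"` field; the source names, field names, placeholder IDs, and the `unify_by_imdb_id` helper are hypothetical.

```python
from collections import defaultdict
from typing import Any, Iterable


def unify_by_imdb_id(
    sources: dict[str, Iterable[dict[str, Any]]],
) -> dict[str, dict[str, list[dict[str, Any]]]]:
    """Group records from many sources under their shared IMDB ID.

    `sources` maps a source name to its records; each record is assumed
    to carry an "imdb_id" key. Result: imdb_id -> source name -> records
    (with the ID field stripped). Records without an ID are skipped.
    """
    unified = defaultdict(lambda: defaultdict(list))
    for source_name, records in sources.items():
        for record in records:
            imdb_id = record.get("imdb_id")
            if not imdb_id:
                continue  # cannot be joined to anything
            fields = {k: v for k, v in record.items() if k != "imdb_id"}
            unified[imdb_id][source_name].append(fields)
    # Convert nested defaultdicts to plain dicts for predictable output.
    return {i: dict(by_source) for i, by_source in unified.items()}
```

Keeping each source's records under its own name, rather than flattening them into a single record, avoids field-name collisions between sources and preserves where each data point came from.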

This is probably the most difficult project I've ever worked on — because every time I run a test, I get lost in the experience for a half an hour.

- Chris Miles (software developer on my team)

We built a fully working prototype and proved the value of the concept. We looked at other subjects to explore, such as music, books, travel, social, and more, and they were all compelling experiences. The project joined the countless other visionary software ideas in Microsoft's own Hangar 51.