A founder walks into a bar. Ouch!

Or: It's all about the context.

The recent AI boom is fueling a hardware revolution. Whether people want AI-everything is open for debate, but the opportunity space is exciting.

Two of the early movers in consumer AI hardware, Humane’s Pin and the Rabbit R1, recently shipped their products. The reception has been largely negative. I don't feel the need to add to the list of gripes, but I do have thoughts on overlooked opportunities. What could be a paradigm shift is being sold as an upgrade, and that misses the point.

A quick recap of how we got here

While ChatGPT helped push AI awareness into the mainstream, the past 20 years have been a golden era for data science and machine intelligence (problem-solving machines). The value of AI lies almost entirely in prediction, whether surfacing patterns in behavior or recognizing patterns in pixels or sound waves. With enough training data, it's possible to detect and organize these patterns.

The transformer architecture unlocked the Large Language Models we now enjoy from OpenAI and others. The evolution that led to this point goes all the way back to the ELIZA chatbot in the 1960s. It’s fun to look at projects like Dasher (see video below) because they show, in a primitive way, how predictive language models work. It’s also easy to forget how mind-blowing Siri was when it was released in 2011.

Dasher is an experimental predictive typing app

Today’s AI unlocks general intelligence (not necessarily AGI; that’s a debate for another time). It enables perception: the ability to factor multiple signals and contexts into a generalized understanding of a problem or question. How contextual understanding improves relevance was a recurring theme in my work at Microsoft, so I'm going to geek out a little.

Context is worth 80 IQ points.

- Alan Kay

Contextual understanding — the big unlock

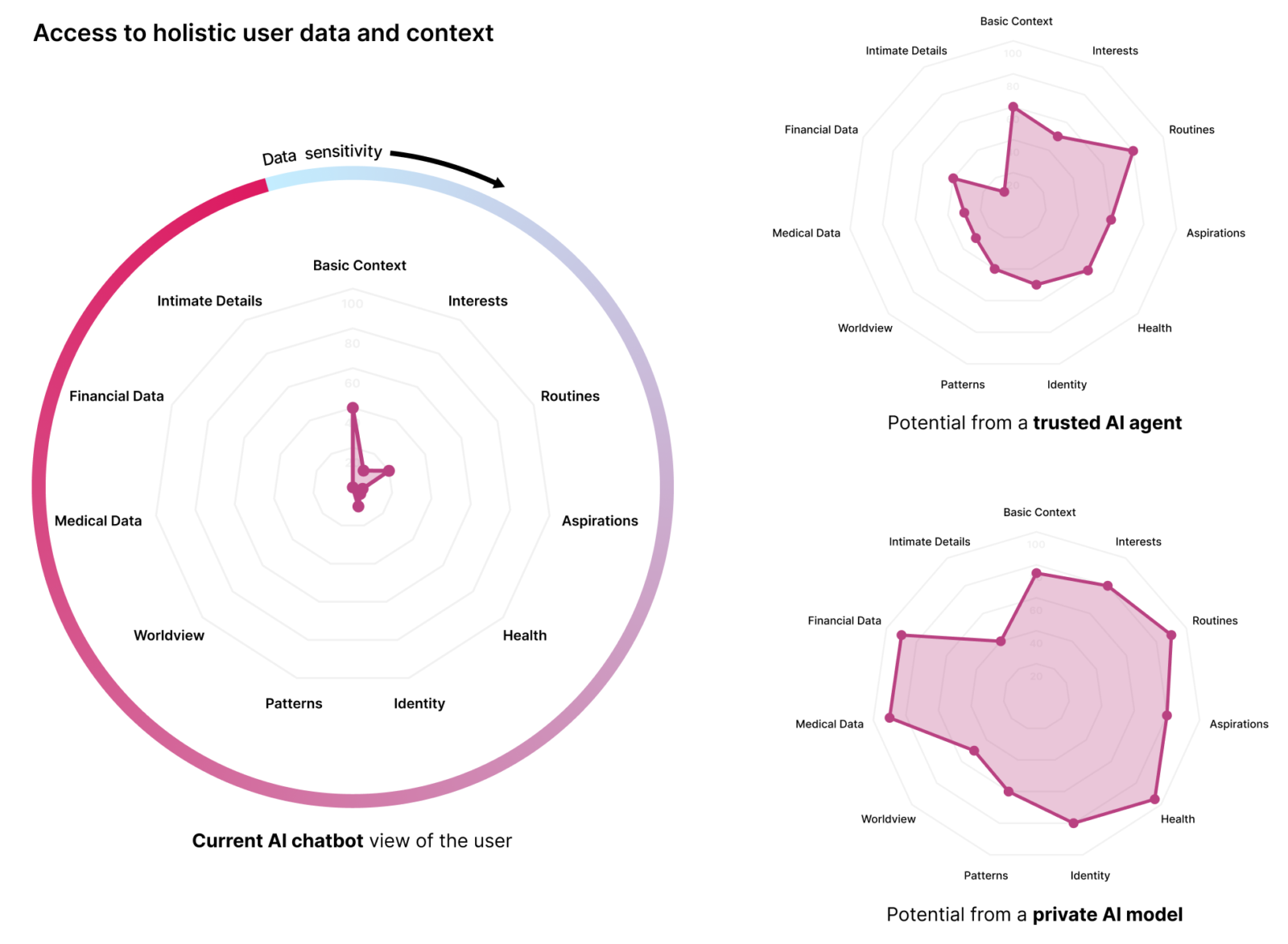

It’s difficult to understand what someone wants without understanding their context. Today, most AI apps have a tiny view of the user’s context, which leads to generic results. And while companies like OpenAI are trying to expand their contextual understanding with features like “Assistants” and “Memory,” the path toward a “full” contextual view is slow and daunting. Google, for example, has struggled to leverage the rich user data it possesses because it doesn’t have explicit permission to use much of it (and it shouldn’t).

A long-running Microsoft project called “Personal Cloud” sought to leverage user context as a form of owned currency. I’ve been tracking other projects that explore this idea of data sovereignty as a service. One comes from a company called Vana, which claims to be “the first network for user-owned data.” I'm not sure I believe that, but it may be the first to scale the idea. Vana has an open-source project called Selfie that runs on your local device and serves a personal LLM grounded in your own data via RAG (Retrieval-Augmented Generation). I’m currently using Selfie to prototype a highly personalized agent.
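To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-augment step. It assumes a handful of hypothetical local notes stand in for the user's data and uses simple bag-of-words cosine similarity in place of the learned embeddings a real system would likely use. The note contents and function names are illustrative only; this is not how Selfie itself is implemented, and the call to a locally running LLM that would consume the final prompt is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical personal notes standing in for a user's local data.
# (Illustrative only; not Selfie's data format or API.)
NOTES = [
    "Dinner with Sam on Friday at the Thai place downtown.",
    "Dentist appointment moved to Tuesday at 3pm.",
    "Started training for a half marathon; long run on Sundays.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Augment the user's question with the retrieved personal context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Context about the user:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("When is my dentist appointment?", NOTES))
```

A production setup would likely swap the word counts for an embedding model and a vector index, but the shape stays the same: find the most relevant personal context, then prepend it to the question before the model sees it.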

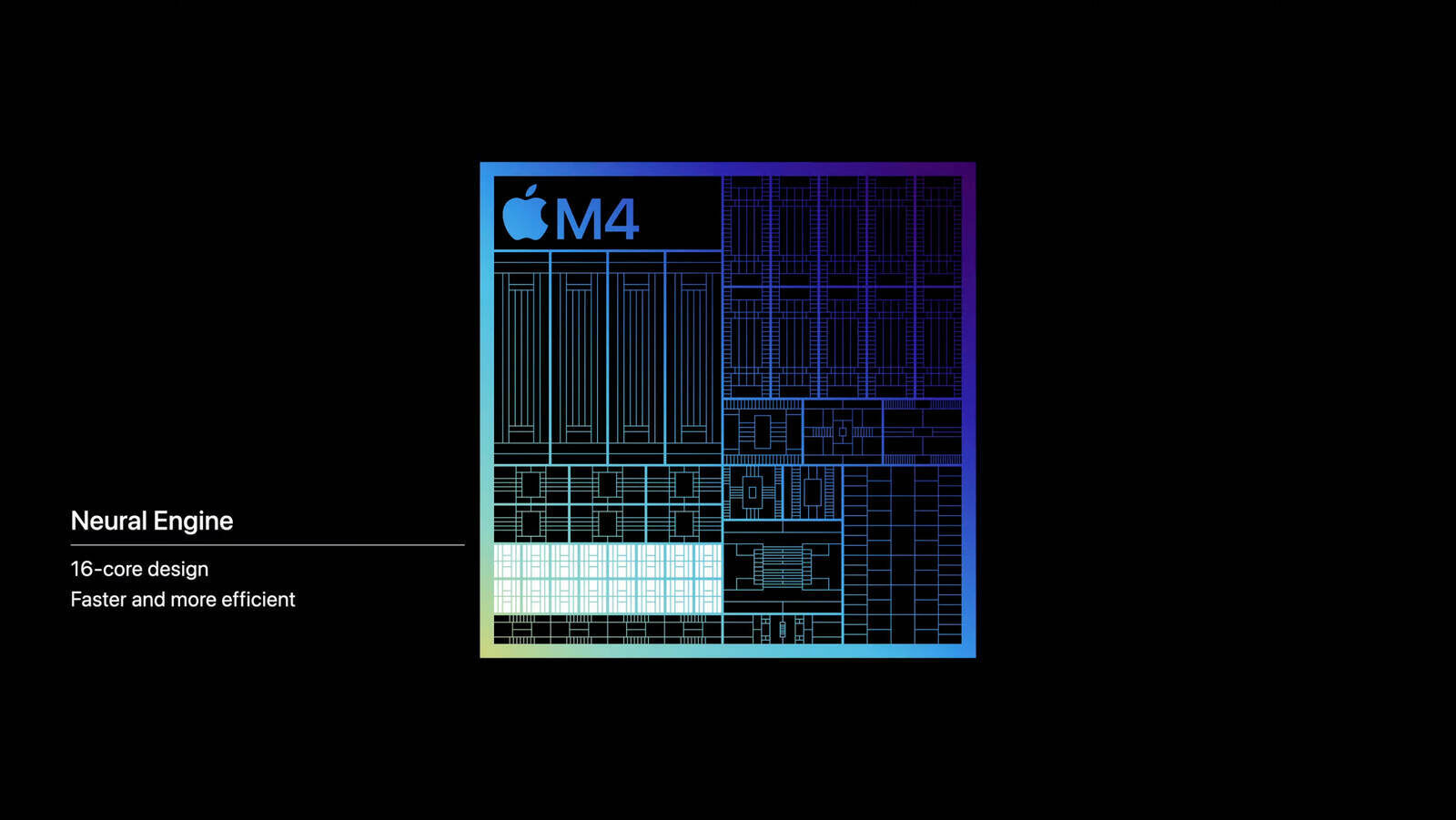

I’m also tracking Apple’s increasingly powerful Neural Engine that's in all their recent devices. It seems Apple is adopting a strategy we promoted at Microsoft, which is running language models "at the edge" (specifically on your device), along with a gatekeeper agent. What this unlocks is a highly secure and private AI service that’s deeply personalized across the app ecosystem and attuned to changing user context. It’s the holy grail (almost).

Back to the AI devices, and why it’s all about context

Let’s start with Humane’s Pin. It seems they overestimated how well AI would perform at launch, which led to horrible reviews and ridicule. But I believe their biggest failure was positioning the device as a phone replacement, i.e., whatever you had with your phone, you can now have without it. I actually like their screen solution, but not as an alternative way to browse friends’ Insta posts. They created the wrong mental model for why this product deserves to exist.

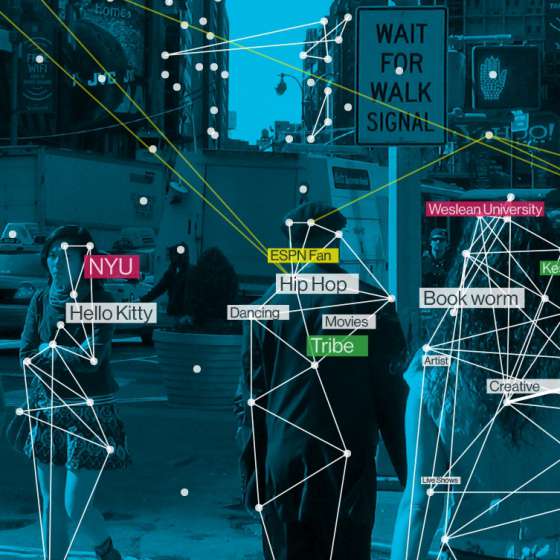

The Pin form factor is 100% about contextual understanding. It’s the eyes and ears (maybe a little touch) of the AI service. Maybe that sounds creepy, but context is everything, and your phone/watch has very limited understanding of the important sensory cues you are experiencing — what you are seeing, hearing, what direction you're facing, whether you’re sitting, standing, riding a bike, skateboarding, are with friends, in a crowded room, or by yourself. These are all powerful signals that give AI IQ.

If I were leading Pin’s product/marketing strategy, I’d be trying to beat Apple to the punch. Pin could be an intimate AI that understands who you are, what you see, what you hear, and how you feel, without you having to worry about anyone accessing your data. It could be "Her."

Another device that just launched to mixed reviews is the Rabbit R1, designed by Teenage Engineering. I suspect they, too, overestimated how far AI would progress by launch date.

Like the Pin, Rabbit forgot to “start with why,” leaving everyone scratching their heads a little. I believe Rabbit’s “why” is the question of how AI mediates the existing world of UI that was designed for humans. They are the first company I’ve seen to articulate the problem and solution of UI abstraction, which they tout as Large Action Models (LAMs). In the end, LAMs likely won’t be an effective moat, but they represent an important new paradigm.

There are other AI-native devices of note about to launch, including Brilliant Labs’ open-source Frame AR glasses and IYO’s VAD spatial computing earbuds (see video below). Both unlock context awareness: one focuses on sight, the other on sound. The question is how they will capture consumers’ imagination. I believe the products that figure out how to humanize AI (i.e., amplify, not replace, human experience) will gain the most traction.

IYO's CEO Jason Rugolo's TED talk on audio computing

The path forward

I’m eager to learn more about Apple’s entrance into the agentic, personalized AI space. An effective, highly personalized Siri and development ecosystem could be a game changer. But I suspect Apple will slow-roll the features and lean on developers to validate ideas before sinking money into core features.

In anticipation of Apple’s new capabilities, I’m working on a fun personal AI project using Vana’s Selfie, built in Swift, and supporting the Brilliant Frame AR platform — stay tuned :)

I'm always curious to hear what others are thinking. Do AI-powered products appeal to you? Is personalized AI interesting? Or do all these ideas cross privacy and ethical lines in the sand?

Posted May 9, 2024