Customer Loyalty on AI

In 2015 I pitched an idea to Starbucks for an AI-powered loyalty offering that would deliver gamified nudges that were personalized for each customer. The vision came in large part from behavioral adherence research I was doing with Kaiser Permanente and a workshop with Stanford behavior design professor BJ Fogg. Along with a crack team from BCG, we delivered a compelling pitch to Starbucks leadership and landed a $30m investment to build a new type of loyalty platform. We created a company called Formation and assembled a rockstar team.

We delivered on the promise. In fact, we blew expectations out of the water. Our offers delivered $250m of incremental value to Starbucks in the first year, and became recognized as a new category and a leading example in customer loyalty. Soon after, we designed United Airlines' loyalty offering.

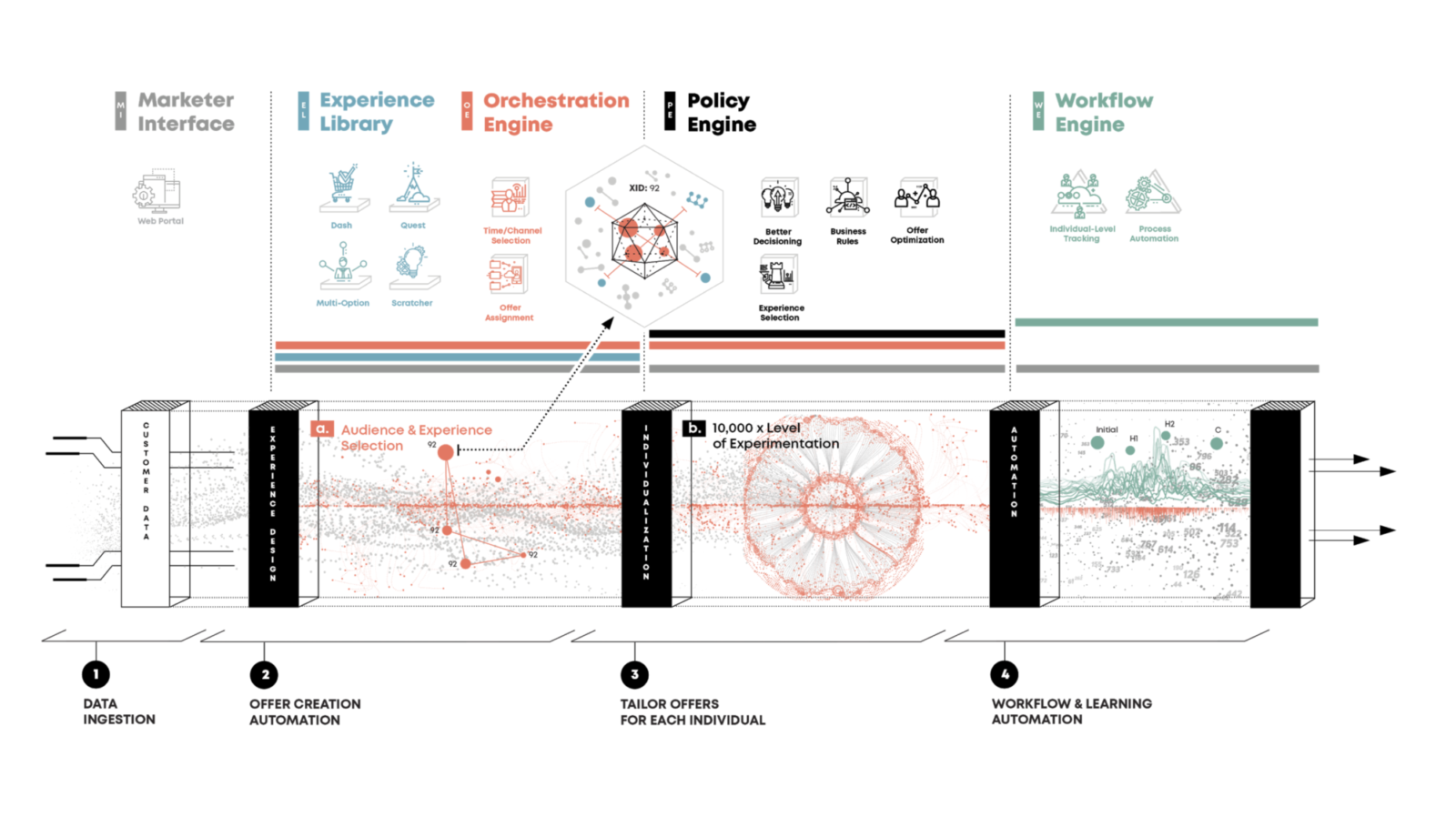

AI in 2015 was a different animal than it is today. We deployed machine learning (ML) and reinforcement learning (RL) at scale — ingesting more than 10 million transactions daily and delivering 30+ million individualized offers multiple times per week. This was a complex and expensive operation — our monthly AWS bill often exceeded $500,000. If you're interested in all the details, I've written about the journey here.

I was recently invited to speak to a room full of creative agency leaders with my friend and colleague Wayne Robins. We decided I'd play the AI optimist and he'd play the pragmatist. A bit silly, but these days it's easy to flip-flop between optimism and pessimism; the duality is strong with AI. I didn't want to talk about AI creativity tools, so I shared my experience building Starbucks' loyalty program, and how AI completely changes the game. It landed pretty well, so I figured I'd articulate it here.

I'm not a data scientist (I took Andrew Ng's intro ML course, so I understand the basics), but I found the problem of predicting purchase behavior fascinating. In many ways, Starbucks was the perfect candidate for ML since engagement was very high and the product variability was low. There were also strong, well-known correlations between purchase behaviors, and a tremendous opportunity to nudge new behaviors. As BJ Fogg might say, motivation and ability are inherently high; all you need is the right prompt (trigger).

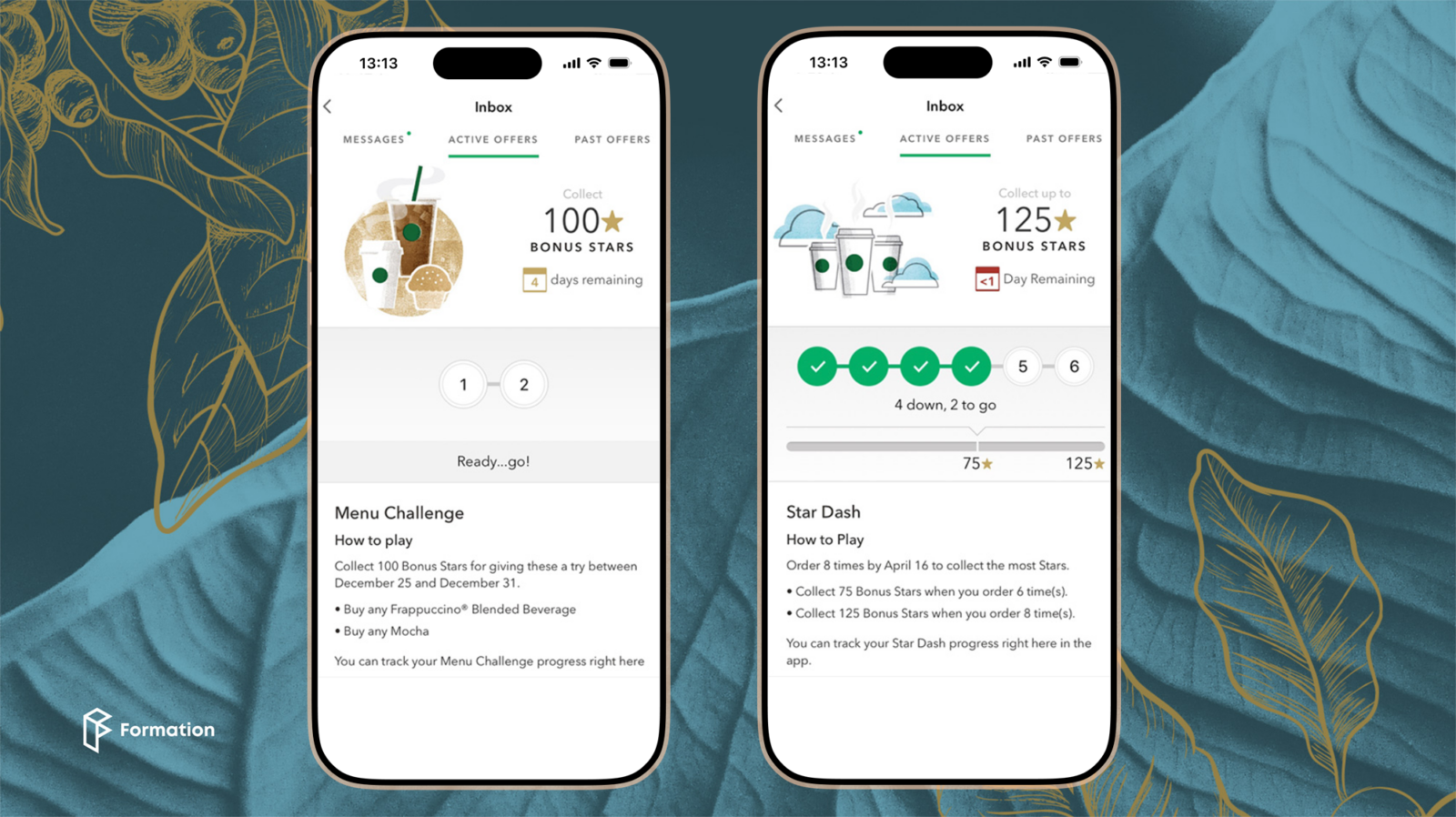

Our solution worked well because we nailed the fundamentals: perceived value, relevance, gratification, and simplicity. The offers themselves were a masterclass in behavioral psychology. Although they seemed simple, they tapped some powerful instinctual motivations such as the Zeigarnik Effect, which is the desire to complete unfinished tasks. Another is transaction utility, which is the feeling one gets from a "good deal" or saving money.

The bulk of the value from personalized offers doesn't actually come from the purchase predictions; it comes from past purchases. The most powerful driver of incremental behavior is aligning past purchases to a reward. For example, the offer might be: buy a latte, a breakfast sandwich, and a Frappuccino and get 100 stars. The customer likely already gets the latte and breakfast sandwich each week, so the important part of the offer is a Frappuccino for 100 stars. 100 stars is almost a free Frappuccino, so the psychology is that you'd be a fool to walk away from a free Frappuccino. Most of the data science work was predicting the incremental purchase and the reward value needed to nudge the new behavior.
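To make the offer logic concrete, here is a minimal Python sketch of the idea, not the code we ran at Formation. It assumes a customer's history is available as one basket per week, and it treats an item as "habitual" when it shows up in at least 75% of weeks. That threshold, the `Offer` type, and the `build_offer` function are all hypothetical names and values chosen for illustration. The incremental item and the reward are passed in, since predicting those was the real data science work.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Offer:
    habitual_items: list[str]  # items the customer already buys regularly
    incremental_item: str      # the new behavior the offer is nudging
    reward_stars: int          # reward for completing the whole offer


def build_offer(weekly_baskets, incremental_item, reward_stars, min_share=0.75):
    """Anchor an offer on habitual purchases plus one incremental item.

    weekly_baskets: list of sets, one set of product names per week.
    min_share: assumed threshold; an item counts as habitual if it
    appears in at least this share of weeks.
    """
    weeks = len(weekly_baskets)
    counts = Counter(item for basket in weekly_baskets for item in basket)
    habitual = sorted(
        item
        for item, n in counts.items()
        if n / weeks >= min_share and item != incremental_item
    )
    return Offer(habitual, incremental_item, reward_stars)


baskets = [
    {"latte", "breakfast sandwich"},
    {"latte", "breakfast sandwich"},
    {"latte"},
    {"latte", "breakfast sandwich", "cookie"},
]
offer = build_offer(baskets, incremental_item="Frappuccino", reward_stars=100)
print(offer.habitual_items, "+", offer.incremental_item, "=", offer.reward_stars, "stars")
```

The latte and breakfast sandwich clear the threshold and the one-off cookie does not, so the customer sees an offer that is mostly things they were going to buy anyway, plus the Frappuccino.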

This is where generative AI changes everything.

Large language models (LLMs) power the generative AI applications we've all become familiar with (ChatGPT, et al.). LLMs are the most powerful generalized prediction machines ever built. While they're very good at predicting what words to write, they're also reasonably good at predicting likely actions, like what product you might be tempted to buy.

Let's look at the basic ingredients of the product we built for Starbucks:

- Ingest customer purchase data (product, time, day, frequency, co-purchases)

- Predict NBO (Next Best Offer) for each customer

- Purchase propensity modeling for each customer

- Calculate personalized reward value for each customer

- Construct personalized offers and deploy to 30 million customers

Our product in 2015 cost around $5m to create and $10m a year to operate (staff + service costs). While the predictions were sound, the entire model was built around the expectation that people are creatures of habit and will generally repeat behaviors. This solution worked well for Starbucks, in part because of the highly habitual customer behavior.

Now let's look at how we could build a loyalty product that works better, for a broad range of products and services, and for a fraction of the cost.

We'll start with how LLMs replace the monolithic ML decision engine. Rather than suck everyone's transactions into the cloud, the offers are constructed in real time on the customer's phone. The phone not only stores past purchases, it can incorporate active context (e.g., walking into a Starbucks at 11am near your office before a meeting) and analyze behaviors that could inform your next offer. Now an offer can be created that's highly relevant and optimized (e.g., here's a lunch offer, oh, and BTW, pick up some treats for your co-workers and get stars).

This is how it works:

- An LLM service like Anthropic Claude provides an enterprise endpoint (API).

- The model is given an inventory of products and services.

- The model is trained on the concepts of purchase behaviors, propensity modeling, reward variability rules, objectives, etc.

- Additional specialized services are created to deal with specific calculations that are a weakness for LLMs.

- The company's native app (e.g., the Starbucks app) stores past purchases, visit history, etc., and can also grab real-time context data like location.

- Through a geo-fence or an app-open event, all the relevant data is packed up and sent to the LLM. It then returns a package of data that can be used to assemble an offer on the fly.
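Here is a rough Python sketch of that last step: packing the on-device data into a request and validating what comes back before assembling an offer. Everything here is an assumption for illustration: the payload fields, the `build_offer_request` and `parse_offer` helpers, and especially `fake_llm`, which is a canned stand-in for the hosted model endpoint and not a real API call. In practice you would swap it for whatever client your LLM provider offers.

```python
import json


def build_offer_request(past_purchases, catalog, context):
    """Pack on-device data into a single JSON payload for the model."""
    return json.dumps({
        "catalog": catalog,
        "past_purchases": past_purchases,
        "context": context,
        "instructions": "Return JSON with keys: items, reward_stars, message.",
    })


def parse_offer(raw_response, catalog):
    """Validate the model's reply before showing anything to the customer."""
    offer = json.loads(raw_response)
    unknown = [item for item in offer["items"] if item not in catalog]
    if unknown:
        raise ValueError(f"offer references items not in catalog: {unknown}")
    if not isinstance(offer["reward_stars"], int) or offer["reward_stars"] < 0:
        raise ValueError("reward_stars must be a non-negative integer")
    return offer


def fake_llm(payload):
    """Hypothetical stand-in for the model endpoint; returns a canned reply."""
    request = json.loads(payload)
    return json.dumps({
        "items": request["catalog"][:2],
        "reward_stars": 100,
        "message": "Grab lunch and treats for the team, earn 100 stars.",
    })


catalog = ["turkey sandwich", "cake pops", "latte"]
payload = build_offer_request(
    past_purchases=[{"item": "latte", "weekday": "Mon", "hour": 8}],
    catalog=catalog,
    context={"trigger": "geofence_enter", "local_time": "11:00", "near": "office"},
)
offer = parse_offer(fake_llm(payload), catalog)
print(offer["message"])
```

The validation step matters more than it looks. The model's reply is treated as a suggestion, and the app only assembles an offer from items that actually exist in the catalog and a reward value that passes basic checks.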

The above scenario is one to two months of design and development work for a four-person team using off-the-shelf LLMs (+/- depending on team/environment). That's $6m vs $300k (to reach MVP). Crazy! There's a new cost, which is the LLM, but it would be nominal, likely about the same as the cost of delivering an email.

Other things to consider include how to measure the incremental lift of the offers, which involves holdout or "control" groups and engagement metrics. Adding the ability to deploy to cohorts unlocks continuous optimization and experimentation (something we struggled with at Formation). Dynamic/personalized offer design and motivationally aligned rewards were capabilities we dreamed of, and are now in reach.
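Measuring lift against a holdout group boils down to simple arithmetic. The sketch below is a simplified illustration with made-up spend numbers, and `incremental_lift` is a hypothetical helper. A real measurement would also need random assignment to the two groups and a significance test before trusting the result.

```python
from statistics import mean


def incremental_lift(treated_spend, control_spend):
    """Return absolute and relative lift of treated over holdout customers."""
    treated_avg = mean(treated_spend)
    control_avg = mean(control_spend)
    absolute = treated_avg - control_avg
    relative = absolute / control_avg
    return absolute, relative


# Illustrative weekly spend per customer (made-up numbers).
treated = [12.0, 15.0, 9.0, 14.0]  # customers who received offers
control = [10.0, 11.0, 9.0, 10.0]  # holdout customers who did not
absolute, relative = incremental_lift(treated, control)
print(f"lift per customer: ${absolute:.2f} ({relative:.0%})")
# prints: lift per customer: $2.50 (25%)
```

The same function works per cohort, which is what makes continuous experimentation practical: run it for each cohort and compare.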

Another opportunity is a trend towards "edge AI," which means models are run on the phone rather than in the cloud. Apple is a champion of this paradigm, with Android quickly following suit. My old boss Blaise Agüera y Arcas, now an AI leader at Google, has been a longtime champion of what we then called device-side services (DDS). Edge AI simultaneously solves issues around privacy, cost, latency, and general data sovereignty. An empowered stance on data ownership could lead to a much richer exchange of contextual data.

The value of LLMs as personalized prediction machines seems highly underutilized and underappreciated at the moment. The focus on text and image generation has done a huge disservice to the perception of value in enterprise solutions. As it becomes clear that the current progress of LLMs is hitting a plateau, it's now time to reap the value of this incredibly valuable tool.

Posted Nov 15, 2024