Human Agency in the Age of AI Persuasion

This guide is meant to be a primer for anyone curious about the core behavioral engagement tactics used by most ad-driven tech companies, and the impact they have on us, our kids, our communities, and society as a whole. I’ll share a “Societal Benefit Score” to show the impact of the features used to drive engagement, because there’s a societal cost to “free” products. Lastly, I suggest some paths forward to mitigate the negative impact.

As a parent of two teenage girls and an architect of a successful AI-powered behavioral nudging product (the Starbucks Loyalty Platform), I’ve been tracking the state of digital media engagement tactics. Much has been written and shared since Tristan Harris' The Social Dilemma movie and the Cambridge Analytica scandal. But those revelations seem tame next to what we have now, and what’s coming soon.

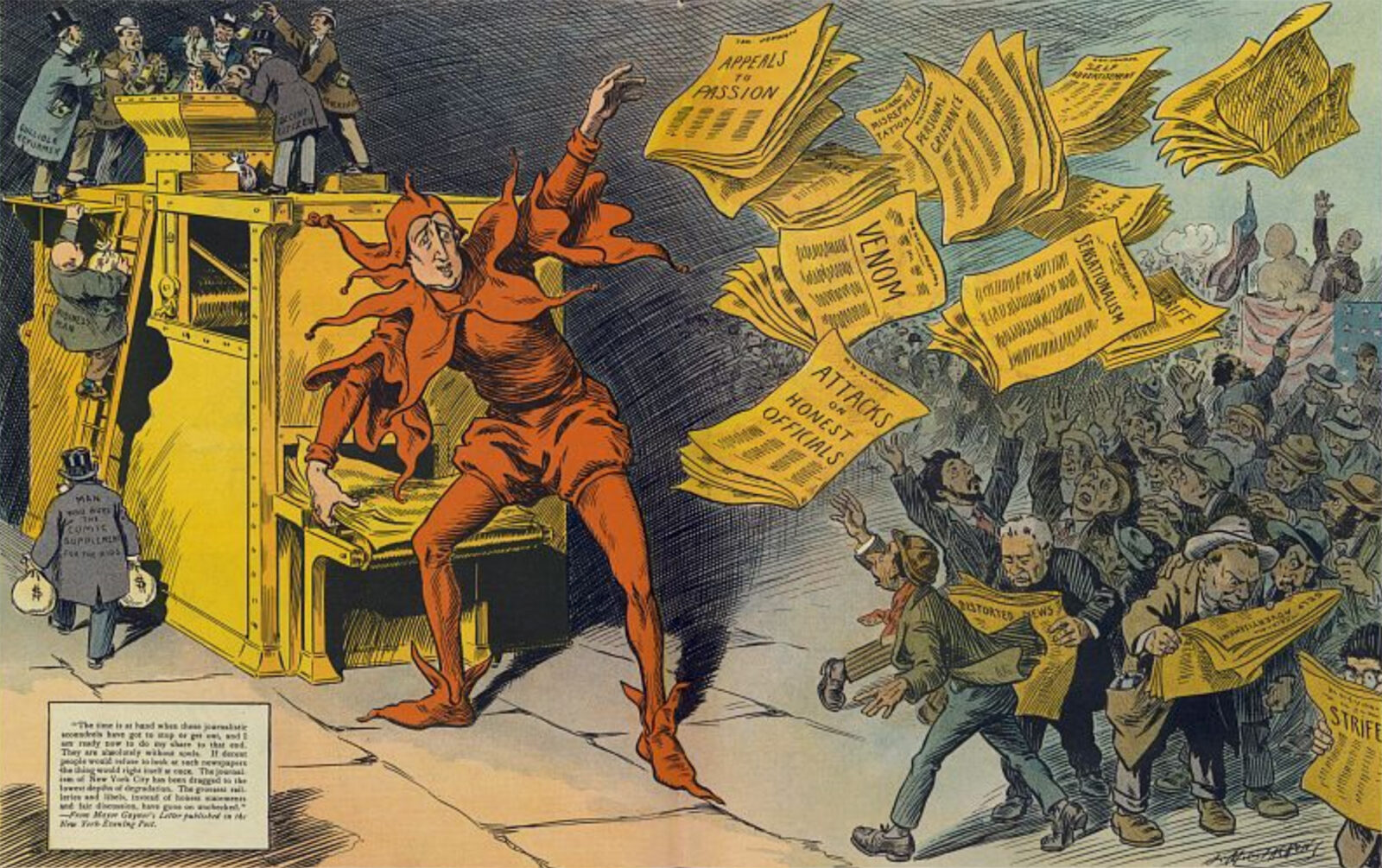

Relevant today — from 1910, media mogul Hearst (as jester) tosses inflammatory headlines to crowds (leading to assassination attempt in background) while businessmen fund his printing press with money bags.

We’re Living Through The “Attention Era”

"We are at risk, without quite fully realizing it, of living lives that are less our own than we imagine." -Tim Wu, "The Attention Merchants" (2016)

In previous eras, the capture of attention was limited by physical and technological constraints. Today's digital platforms can continuously harvest our attention through sophisticated algorithms and psychological triggers. This transformation has profound societal implications, from how we spend our time and make decisions to how we form relationships and process information. What makes this era distinct is not just the scale of attention capture, but its precision and pervasiveness, extending into every aspect of our daily lives through our constant connection to digital devices.

"There's a sense in which the attention economy can be read as kind of a distributed denial-of-service attack on the human will" -James Williams, "Stand Out of Our Light" (2018)

James Williams, a former Google strategist turned Oxford-trained philosopher, argues that the attention economy fundamentally conflicts with human goals and values. He contends that many digital technologies we use daily are designed to maximize the time and attention we spend with them, often using techniques that exploit our psychological vulnerabilities. In the early days of the web, the decision to monetize attention became the winning model over pay-to-play.

“If you're not paying for something, you're not the customer; you're the product being sold.” -Andrew Lewis (2010)

In the world of tech, attention is king. All metrics point towards maximizing attention. There have been few ethical guidelines around the pursuit of attention — not even for our kids, the poor or vulnerable. If a product could achieve 24/7 engagement, the tech industry would celebrate the ingenuity.

Reverse Engineering Survival Circuits

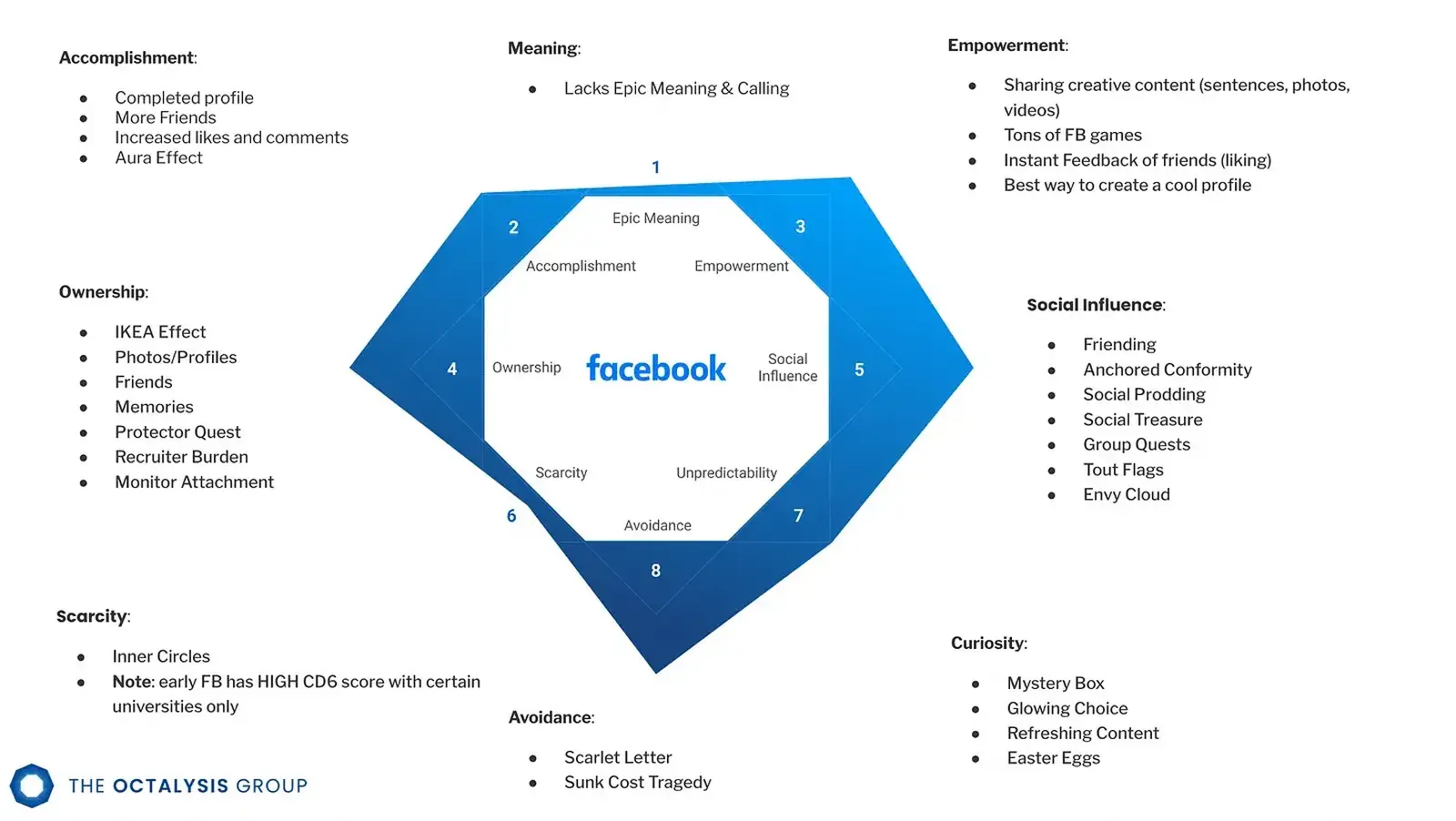

Attention drives ad revenue, and in the world of quarterly earnings and shareholder value, a perpetual increase in revenue is job #1. Optimization, A/B testing, and product experimentation-at-scale helped surface highly effective engagement tactics that leverage primitive brain functions. While there are multiple paths to engagement—some built on achievement and social connection, others on fear and anxiety—the more primal "dark patterns" often prove most effective at driving metrics.

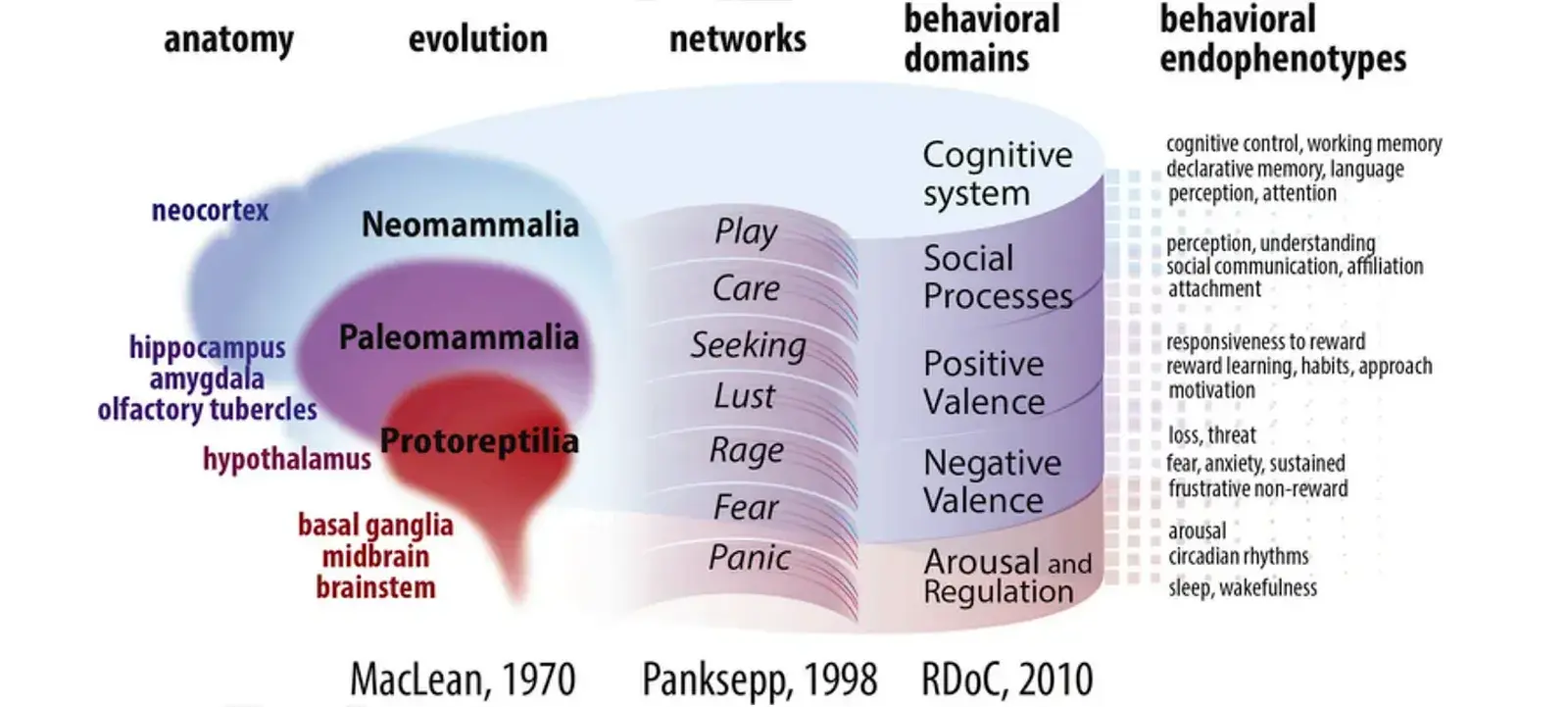

Jaak Panksepp was one of the first neuroscientists to map the connection between core emotional circuits and the ancient systems that evolved in layers from our reptilian ancestors (Protoreptilia) through early mammals (Paleomammalia) to modern mammals (Neomammalia). These insights reveal how social media platforms can engage both our primitive survival mechanisms and our more evolved social needs. For example, the "Panic" system, connected to the paleomammalian brain, drives us to maintain streaks and avoid losses. The "Seeking" system, rooted in ancient midbrain regions, compels endless feed scrolling. Meanwhile, the more recently evolved "Care" and "Play" circuits can be engaged through positive social interactions like meaningful comments and supportive communities.

These emotional systems are physically anchored in specific brain regions like the hypothalamus, amygdala, and basal ganglia, creating a direct line from ancient survival circuits to modern digital behaviors. While platforms can leverage these systems either way — either building users up through achievement and connection or hooking them through fear and anxiety — the latter often produces stronger short-term engagement. The anticipation of loss or social exclusion triggers a powerful stress response that, when combined with intermittent relief, creates a particularly sticky behavioral loop.

DAUs (Daily Active Users) remain one of the most important metrics for consumer apps. The metric counts the people who open an app on a given day, and the most engaged of them return every single day, often many times per day. This "stickiness" can be achieved through thoughtfully designed products that deliver genuine value. However, the most reliable path to high DAUs often involves tapping into our primitive survival instincts, as outlined in the RDoC (Research Domain Criteria) framework's behavioral domains. This framework, an initiative of the US National Institute of Mental Health (NIMH), explores the connection between brain function and mental disorders, connections that become increasingly relevant as we better understand digital behavior patterns.
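To make the metric concrete, here is a minimal sketch of how DAU and a common "stickiness" ratio (DAU divided by monthly active users) might be computed from an activity log. The event format, the function names, the 30-day trailing window, and the sample users are all assumptions made for illustration; real analytics pipelines define these details in their own ways.

```python
from datetime import date, timedelta


def daily_active_users(events, day):
    """Count distinct users with at least one event on `day`."""
    return len({user for user, when in events if when == day})


def monthly_active_users(events, day, window_days=30):
    """Count distinct users active in the trailing window ending on `day`."""
    start = day - timedelta(days=window_days - 1)
    return len({user for user, when in events if start <= when <= day})


def stickiness(events, day):
    """DAU / MAU: the share of the month's users who showed up today."""
    mau = monthly_active_users(events, day)
    return daily_active_users(events, day) / mau if mau else 0.0


# Hypothetical activity log: (user, date the app was opened).
EVENTS = [
    ("ana", date(2025, 1, 1)),
    ("ana", date(2025, 1, 2)),
    ("ben", date(2025, 1, 2)),
    ("ben", date(2025, 1, 2)),   # repeat opens on one day count once
    ("cy", date(2024, 12, 20)),  # active this month, but not today
]

today = date(2025, 1, 2)
print("DAU:", daily_active_users(EVENTS, today))
print("Stickiness:", round(stickiness(EVENTS, today), 2))
```

A ratio near 1.0 means almost everyone who used the app this month also opened it today, which is the outcome engagement teams are typically rewarded for.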

Platforms Are Converging

Short-form video has become the latest strategy for maximizing sustained engagement. TikTok demonstrated how bite-sized video clips could hold attention far more effectively than scrolling through text or photos. Now, nearly every social and media platform has adopted “Shorts” or “Reels” to keep you glued. It’s so sticky because each clip is quick, stimulating, and rewards your brain with a steady stream of micro-entertainment. Before you know it, you’ve been watching for an hour, captivated by the promise that the next short might be even more entertaining than the last.

Meanwhile, the driving force behind all of this is a wave of self-optimizing algorithms designed to maximize engagement (and, in turn, monetization). These systems watch your every click, swipe, and pause, then serve more of whatever triggers the strongest reaction — even if it thrives on outrage or FOMO. This naturally steers users toward dark patterns, since fear and controversy generally generate more interaction than feel-good content. The social implications can be huge: filter bubbles, misinformation, and a constant escalation of emotional stakes. It’s a vicious cycle where engagement metrics (like watch time or shares) take priority over well-being, leading to the troubling landscape we see today.
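The feedback loop described above can be illustrated with a toy simulation. This is only a sketch: it uses a simple epsilon-greedy strategy as a stand-in for whatever proprietary systems real platforms run, and the content categories and engagement rates are made-up numbers chosen for illustration, not measurements.

```python
import random

# Assumed, illustrative probabilities that a user engages with each
# kind of content. These are NOT measured values.
TRUE_RATES = {"feel_good": 0.15, "fomo": 0.30, "outrage": 0.60}


def simulate_feed(true_rates, rounds=20000, epsilon=0.1, seed=0):
    """Serve content with an epsilon-greedy policy that optimizes only
    for observed engagement. Returns how often each category was shown."""
    rng = random.Random(seed)
    shows = {kind: 0 for kind in true_rates}
    engagements = {kind: 0 for kind in true_rates}

    def observed_rate(kind):
        # Engagement rate seen so far; unseen categories start at zero.
        return engagements[kind] / shows[kind] if shows[kind] else 0.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            # Occasionally explore a random category.
            choice = rng.choice(list(true_rates))
        else:
            # Otherwise serve whatever has engaged best so far.
            choice = max(true_rates, key=observed_rate)
        shows[choice] += 1
        if rng.random() < true_rates[choice]:
            engagements[choice] += 1
    return shows


shows = simulate_feed(TRUE_RATES)
for kind, count in sorted(shows.items(), key=lambda item: -item[1]):
    print(f"{kind:10s} served {count} times")
```

Nothing in the loop knows or cares what the content is. It only tracks which category earns the most reactions, so under these assumed rates the feed drifts toward whichever category provokes the strongest response.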

Societal Impact

"A major commitment to television viewing – such as most of us have come to have – is incompatible with a major commitment to community life." -Robert D. Putnam, “Bowling Alone” (2001)

While technology has transformed society in numerous positive ways, social media and attention-based platforms present unique challenges to community bonds. Robert D. Putnam's "Bowling Alone" presciently identified how technology could redirect attention away from local community engagement. Modern social media has accelerated this shift — not because technology itself is harmful, but because engagement-optimized platforms naturally compete with deeper forms of social connection. While these platforms excel at enabling lightweight interactions and global reach, they often optimize for metrics that don't capture meaningful social bonds. The result is a paradox where we're more connected than ever digitally, yet spending less time in the kind of sustained, local relationships that historically built social capital and community resilience. This outcome stems not from malicious intent, but from product decisions that prioritize engagement metrics over social cohesion — a choice that may have long-term societal implications we're only beginning to understand.

Polarization

Many social platforms rely on algorithms that feed you content you’re most likely to engage with, which can push you deeper into a bubble of similar opinions. For instance, during elections, platforms might serve you only the political content you already agree with, reinforcing your beliefs and distancing you from other viewpoints. Over time, this can cause real-life divides, with family members arguing at the dinner table because each side is sure they’ve got the only “true” version of reality.

Extremism

Because polarizing and sensational topics get more clicks, fringe ideas can quickly gather momentum online. We’ve seen small groups make a big impact, from radical political extremists who plan real-world protests, to conspiracy theorists who gain massive followings. In many cases, those who feel ignored or marginalized in everyday life find a sense of community within these online groups — often without realizing how extreme some views can become.

This challenge has recently intensified with Meta's January 7th announcement that it is ending its third-party fact-checking program in the US and loosening content moderation across its platforms (Facebook, Instagram, and Threads). This shift weakens guardrails that previously helped limit the spread of harmful content, potentially accelerating the spread of extremist viewpoints and misinformation. It exemplifies how platform decisions can have profound societal implications, particularly when these platforms serve billions of users worldwide.

Fear and Depression

Scrolling past picture-perfect photos of friends, influencers, and celebrities can spark feelings of inadequacy, leading to anxiety and depression. Teens might compare their own daily struggles to the highlight reels they see online, wondering why everyone else’s life looks so amazing. Meanwhile, platforms often amplify urgent or negative content — like catastrophic news stories — because fear-based headlines keep people glued to their feeds. The result is a constant sense that the world is scary and you’re somehow falling behind.

Misinformation

Unverified rumors can go viral faster than ever, especially if they’re shocking or fit neatly into a particular worldview. We’ve seen false medical advice and conspiracy theories spread about topics like COVID-19, sometimes with dire health consequences. In many cases, people share these posts without checking sources because they’re either well-intentioned (trying to “help” others) or seeking a quick emotional release. This widespread confusion blurs the line between fact and fiction, making it harder for anyone to know what to believe. What makes matters worse is that social media companies benefit financially from misinformation and often choose not to suppress it.

Measuring Personal & Societal Impact

A number of organizations and frameworks, such as B Corp, the Global Reporting Initiative (GRI), and the ESG framework (Environmental, Social, and Governance), attempt to measure the societal impact of companies and their products or services. These metrics are primarily focused on employment practices, digital rights, energy use, transparency, and more, but don’t directly address product tactics.

I thought it would be interesting to create a societal impact score that focuses on the intent of product features, and the resulting positive or negative impact on individuals and society. You can read more about this Societal Benefit Score.

Below you’ll find a list of dominant social/media services’ feature prominence and a score. In this score, anything less than zero has a negative impact on societal health. Duolingo and Strava, two popular for-profit gamified consumer apps, are also included to show how gamification and behavioral tactics can be leveraged for good, and receive a positive score.

Note: The charts below truncate on mobile; click the links below to view the charts in full. Also note that the chart below scrolls side-to-side.

The scatter-plot below looks at the same products with the societal benefit score against the total user base and the amount of value extracted (primarily from paid influence/ad revenue) from a user in one hour of use.

I recognize the scores may not align with individual perceptions of personal or societal value. For example, someone making a living from TikTok in an economically challenging environment will likely experience a highly positive overall impact. It’s also interesting to note that many people (particularly young tech-natives) rationalize dark patterns in a positive light. For example, realtime location sharing is seen as a way to keep friends honest and be more transparent, which means accepting the FOMO, privacy, and safety concerns that come with it.

Services like LinkedIn and Substack, which both serve a professional function and provide tremendous value to both companies and individuals, may be unfairly penalized for using dark engagement tactics. But looking at the impact the products are making on society as a whole often paints the clearest picture. This is where a broad benefit scoring system, like the one B Corp uses, will show a more complete picture.
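As a rough illustration of the two quantities discussed in this section, the sketch below computes a feature-weighted score and the value extracted per user-hour. It is not the actual Societal Benefit Score formula, which is described separately. The weighting scheme, the feature names, and every number here are hypothetical placeholders for a fictional app.

```python
def benefit_score(features):
    """Sum of prominence (0 to 1) times impact (-1 harmful to +1 beneficial).
    A total below zero suggests a net negative effect on societal health."""
    return sum(prominence * impact for _, prominence, impact in features)


def value_per_user_hour(annual_revenue, users, hours_per_user_per_year):
    """Revenue extracted from one user during one hour of use."""
    return annual_revenue / (users * hours_per_user_per_year)


# Hypothetical feature list for a fictional app:
# (feature name, prominence in the product, assumed impact of its intent)
EXAMPLE_APP = [
    ("streak_loss_alerts", 0.8, -1.0),
    ("infinite_scroll", 1.0, -0.5),
    ("skill_progress", 0.5, 1.0),
]

score = benefit_score(EXAMPLE_APP)
print("Score:", round(score, 2), "(negative)" if score < 0 else "(non-negative)")

# Placeholder figures: $1M yearly ad revenue, 10,000 users, 50 hours each.
print("Value per user-hour:", value_per_user_hour(1_000_000, 10_000, 50))
```

Separating prominence from impact keeps the score about product intent: a harmful tactic buried in a settings menu weighs less than the same tactic placed at the center of the experience.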

What’s Coming

Two converging trends are transforming our relationship with products and services. Personalization combined with AI-powered insights promises to deliver deeply relevant and compelling experiences. In a world where delivering customer value is the objective, this is the holy grail. But in the context of winner-takes-all capitalism, the end game is maximizing value extraction.

Imagine this scenario. As AI services become central to your daily routines, both professional and personal, the services build incredibly detailed personalized profiles. Your communications, schedules, locations, family activities, interests, relationships, concerns, medical issues, hopes and dreams — they all become part of a “personalization model” that knows your every need. The personalization allows for value to be delivered preemptively — a perfect new outfit arrives before the holiday party. As AI companies need to further monetize to meet the expectations of their sky-high valuations, ad revenue is the most direct and proven path. Ads begin as inline suggestions (not unlike Google’s paid search results), but slowly move towards “embedded personalized insights” — recommendations tailored to your specific needs. For a premium, brands can pay for AI model influence. The delivery of relevance becomes strategic persuasion. With the model knowing your needs, vulnerabilities, and historic influences, the ad-injected content outweighs general content in the model and is now mapped to your individual brain chemistry. This moves beyond products and services to education, news, politics, social relationships and romantic pursuits.

This scenario is the natural evolution of the ad-supported freemium services we have all come to expect. What’s new is the intelligence layer, provided by generative AI, that can execute targeted behavioral tactics, together with a service so useful that people freely trade their personal insights to use it. It’s not inevitable: other outcomes can come from increased regulation, competitive pressures, shifting sentiment, or societal outrage/rejection. But as a society, we’re like frogs in a warming cauldron.

Towards Beneficial Outcomes

The seemingly inevitable trajectory of AI will drive many to unplug, and I don’t blame them. Personally, I see the current state as a fascinating paradox of choice with incredible opportunities and equally grave challenges. I see my own role as one of many, who can possibly nudge AI towards human benefit. We are story creatures and choose to manifest our collective visions of the future, whether good or bad. I have a few “solutions” to share, with more on the way.

One of the beneficial visions I’ve been obsessed with since my time at Microsoft is the idea of a personal agent that serves as a protector — I call it a guardian angel agent. It would initially work by shadowing your actions (both online and offline), raising concern for content that appears manipulative — similar to security software, but for AI persuasion. An example I often use is when content makes you angry or scared, there’s a good chance you’re being manipulated by the amygdala hijack — a tactic for instantly deactivating your rational brain. Your agent can alert and redirect your actions. Another example could be a personal agent that helps a teen learn how to develop good habits with social media usage — possibly preventing them from creating a long-term regret.
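To give a feel for the "security software for persuasion" idea, here is a deliberately simple sketch that flags text heavy with fear and anger cues. It is a toy illustration, not the prototype described in this guide. The cue list, the threshold, and the function name are assumptions, and a real guardian agent would need far more than keyword matching (for example, an on-device model that understands context).

```python
import re

# Hypothetical cue words associated with fear- or anger-driven framing.
FEAR_ANGER_CUES = {
    "shocking", "terrifying", "furious", "outrage",
    "destroy", "betrayed", "disaster", "urgent",
}


def review(text, threshold=0.15):
    """Return (score, flagged), where score is the share of words that are
    fear/anger cues and flagged is True when it reaches the threshold."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0, False
    hits = sum(1 for word in words if word in FEAR_ANGER_CUES)
    score = hits / len(words)
    return score, score >= threshold


ALARMING = "Shocking! They want to destroy everything you love. Be furious!"
CALM = "The library opens at nine on Saturday."

for sample in (ALARMING, CALM):
    score, flagged = review(sample)
    label = "pause before reacting" if flagged else "looks fine"
    print(f"{score:.2f}  {label}: {sample}")
```

The point of the sketch is the interaction pattern rather than the detection method: the agent sits between you and the content, and its only job is to prompt a moment of reflection when emotional pressure is high.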

I’ve been prototyping personal agents and the protocols for negotiating on your behalf (e.g., facilitating an exchange of personal information for a personalized recommendation with a trusted retailer). I’m exploring solutions for private, device-side AI models that will serve only you. I believe one way to navigate the growing complexity and hostility of an AI-powered future, especially an unregulated one, will be using personal agents free from outside influence to filter and regulate the noisy world, helping you maintain control over your digital experience.

Apple is well positioned to support this type of empowered, benign AI service. I won’t hold my breath that Apple will make the right choices, but they are an interesting counterbalance to what looks like a broad movement towards extractive services. I also still hold out hope the vision Microsoft once had could be realized — I still have a couple close colleagues working on related initiatives.

In the near term, it’s critical we move forward with eyes wide open. Social media, entertainment, news and business are all converging into a consolidated and highly optimized attention extraction machine. I like to tell my kids that they can be either creators or consumers of tech. I also tell them that it’s ok to unplug. There’s a world of magic and wonder in the presence of the natural, living world. Spend time with friends in person, participate in community-building (which is hard work btw, so don’t give up), spend time in nature, meditate, and explore the world.

Key Takeaways

- Modern attention-capture systems are fundamentally different from previous eras, using sophisticated algorithms and psychological triggers to continuously harvest our attention. This isn't just about time spent - it's about the quality of our thoughts, decisions, and relationships.

- Social and media platforms are increasingly leveraging our primitive brain circuits through behavioral design. What appears as "engagement" often taps into survival instincts like fear, social comparison, and loss aversion - creating powerful behavioral loops that can be hard to break.

- The convergence of short-form video and AI-powered algorithms is creating unprecedented levels of engagement. While this drives business metrics, it often comes at the cost of mental well-being, genuine social connections, and community resilience.

- The societal impact extends far beyond individual screen time - we're seeing increased polarization, the rapid spread of misinformation, and declining mental health, particularly among young people. These aren't bugs in the system - they're often natural outcomes of engagement-optimized design.

- As AI becomes more sophisticated, the challenge will intensify. Personalized AI models will know not just what content keeps us engaged, but exactly how to influence our behavior through deep understanding of our individual psychological triggers.

- There are paths forward: developing personal AI guardians, supporting regulation of harmful practices, building real-world communities, and making conscious choices about our technology use. The key is moving forward with awareness and intention rather than passive consumption.

Resources

Center for Humane Technology

Tristan Harris’s foundation for addressing these challenges, with many resources for parents, kids, and anyone looking for awareness and solutions.

Get Together

Resources created by clever, design-minded folks to help spark real, in-person community.

Media and Children Resources

From the American Academy of Pediatrics — a collection of recommendations and best practices for kids and teens.

UNESCO AI Ethics

Resources and recommendations for responsible and beneficial uses of AI.

Luiza’s Newsletter

Great resource for following AI ethics, regulation and governance.

Attention Management

A UX-focused research paper I wrote in 2013 on the emerging attention crisis.

Societal Benefit Score

Deeper dive into my ad-hoc framework for scoring the societal impact of gamified apps.

Posted

Jan 8, 2025