Ambient Bionics

The etymology of the word “bionic” is a beautifully simple construct: a blend of bio, Greek for life, and electronic. The term hasn’t been in fashion for some time, likely a casualty of the 1970s obsession with cyborgs that produced the kitschy TV series The Six Million Dollar Man and its follow-up, The Bionic Woman. Bionic became synonymous with the idea that technology could give ordinary people superpowers: super speed, super strength, super vision, super intellect. Bionics, along with virtual reality (VR) and augmented reality (AR), has always been strongly associated with the enabling hardware and/or its interface.

In the spirit of design thinking and a less-is-more philosophy, and in response to tech fatigue, I’d like to share some thinking about an idea I’ll call Ambient Bionics (AmB). It borrows from the term Ambient Intelligence (AmI), which describes environments that adapt intelligently to human needs and interests. Ambient Bionics is an inside-out view of AmI: the person is enhanced, rather than the environment. Beyond its elegance, I chose the word bionic to highlight the idea of being endowed with superpowers.

The evolution of modern computers, and in particular the web, has accelerated our reflection on, and re-evaluation of, the transition from modernism to postmodernism that unfolded through the latter half of the 20th century. The shift from a foundation of absolute truth and objectivity towards the disillusionment of seeing ourselves, with all our complexity, reflected in our creations underscores the importance of addressing complexity as we dive deeper into our relationship with technology. The "less is more" ethos is tightly coupled to technology’s potential. We are moving away from an operator/machine relationship towards a model that more closely resembles co-working. This shift represents a fundamental change in how we perceive technology and how we use it. More on the value of reductionist thinking a bit later.

Where we once were rational animals, now we are feeling computers, emotional machines.

- Sherry Turkle, 1984, The Second Self: Computers & the Human Spirit

In 2011 I joined a floating R&D team at Microsoft under the wing of Blaise Agüera y Arcas. My first assignment was working with the Cortana team (a Siri/Alexa competitor), where we developed the language and rules for conversational interaction design and explored the value of natural user interface (NUI) design. As we considered the long game of virtual assistants, ideas emerged for intelligent agents whose roles extend far beyond the invoke-and-command modality. Among them was the notion of a digital proxy that encapsulates an ever-evolving awareness of your needs and expectations.

Around the same time, I was working on gaze-based interaction research with the HoloLens team (when it was still an awkward pile of sensors and headache-inducing laser projectors). The HoloLens work, along with my experience with VR, left me unconvinced of the value of wearable displays. I was pretty sure a decent form factor for immersive AR wearables (one I’d wear in public) was at least a decade away; I now feel it will be another decade. The entire pursuit felt very “more is more.”

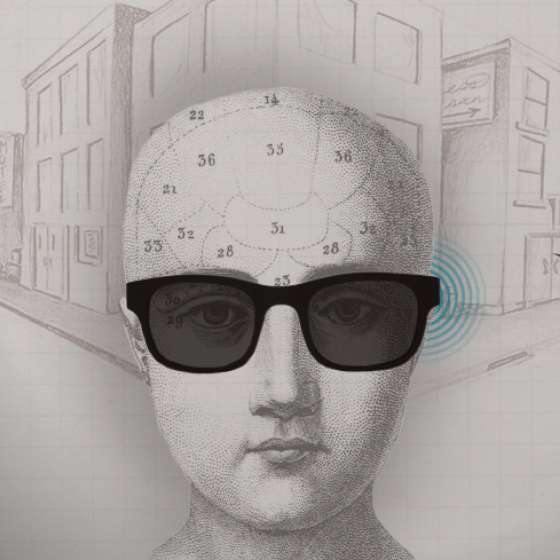

While still at Microsoft, I developed an idea with a co-worker that led to a project we affectionately called “Clippy,” which explored purely audio-based augmented reality. The visual part of AR is often a distraction or a disappointment (or both), and audio felt superior to screens for many applications. Audio is highly nuanced and filterable, with the ability to control the mix of natural vs. augmented sound reaching the inner ear. Stereo audio can also be highly spatial, projecting a fully three-dimensional soundstage. Audio can also move between conscious and unconscious stimuli, raising awareness without triggering active engagement. With a talented team of audio engineers, immersive audio can be a deeply profound journey. Best of all, the hardware was already available and fairly discreet.

Concept for Cortana-optimized discreet earbuds with active contextual awareness, 2013

While this concept may seem mundane today, keep in mind this was 2013 and the movie “Her” had not come out yet. I intended this proof of concept to show the immediate value of a conversational agent like Cortana, along with some enhancements around contextual understanding and spatial awareness. The value I was excited about is something I describe in my Sixth Sense project: interaction that becomes nuanced and unobtrusive. The result is an additive layer of contextual insight rather than a call-and-response search tool. Part of what I was trying to solve for was the clear next step in the evolution of intelligent agents: shifting the interface from responsive to preemptive.

Another project I crossed paths with, called “The Personal Cloud,” had been active for nearly a decade. At a high level, its goal was to restore data ownership to its rightful owner, the user, and to provide infrastructure for managing the exchange of data between users and services, thus cutting out the middleman (Google, Facebook, et al.). The team built the concept on a foundation of trust and empowerment, since the project could never work without buy-in from the end user. The challenge, then, was building intelligent and secure “agents” that could adapt to each individual user’s nuanced expectations, plus the service endpoints the agents would engage with, all without risk of exploitation.

The Personal Cloud project heavily influenced my thinking about the role intelligent agents will play in our future. Virtual assistants, such as Google Assistant, can already manage more complex real-world tasks like handling a reservation or negotiating a product purchase. As we navigate an increasingly complex and consequential technology landscape, people will get more comfortable offloading progressively more complex tasks. At the same time, technology will become opaque to most people, largely because of ubiquitous endpoints with baked-in intelligence. While abstraction will always provide a simple user interface, the underlying complexity will be unintelligible. It’s here that the personal intelligent agent becomes the enabler of personal choice and representation within a highly complex framework. As a cornerstone of ambient intelligence, the personal agent extends far beyond virtual engagement, providing guidance for complex, real-world activities such as augmenting your professional responsibilities or helping you achieve long-term goals.

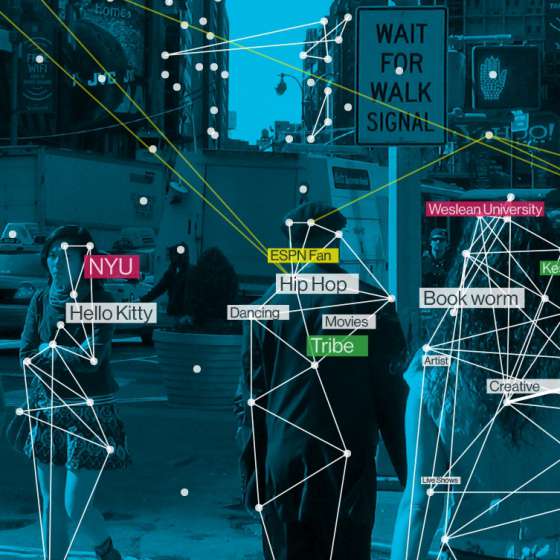

This notion of an intelligent personal agent that adapts to your needs, represents your deeply held beliefs, and protects you from digital threats is the foundation of ambient bionics. The agent’s power comes from combining deep contextual awareness, enabled by a trusted ecosystem of worn and ambient devices, with broad access to personal services that give the agent highly relevant data. With an intimate understanding of your needs, a clear sense of engagement etiquette, and an appropriate level of escalation, the agent can act on your behalf, alert you when needed, or subtly nudge you in "the right" direction. Superpowers could manifest as extrasensory perception: sensing danger, or having a "feeling" about someone that leads to an unexpected moment of serendipity.

This idea of being endowed with an extra or enhanced sense is something I explored in my Sixth Sense and Serendipity Watch projects. It’s also an idea that potentially transcends the egocentric relationship with technology and embodies an external, expanded awareness. In early video games, a “God view” gave you an expanded worldview, allowing you to see behind walls or map a path to a successful completion. When this extra sense provides guidance to avoid harm, it takes on a new role: a personal guardian angel. Awareness shared between personal agents adds yet another layer, letting a network of agents expand each person’s perception, although this is fraught with privacy issues. This notion of AI as a guardian angel is one of the more optimistic use cases in the march towards general AI.

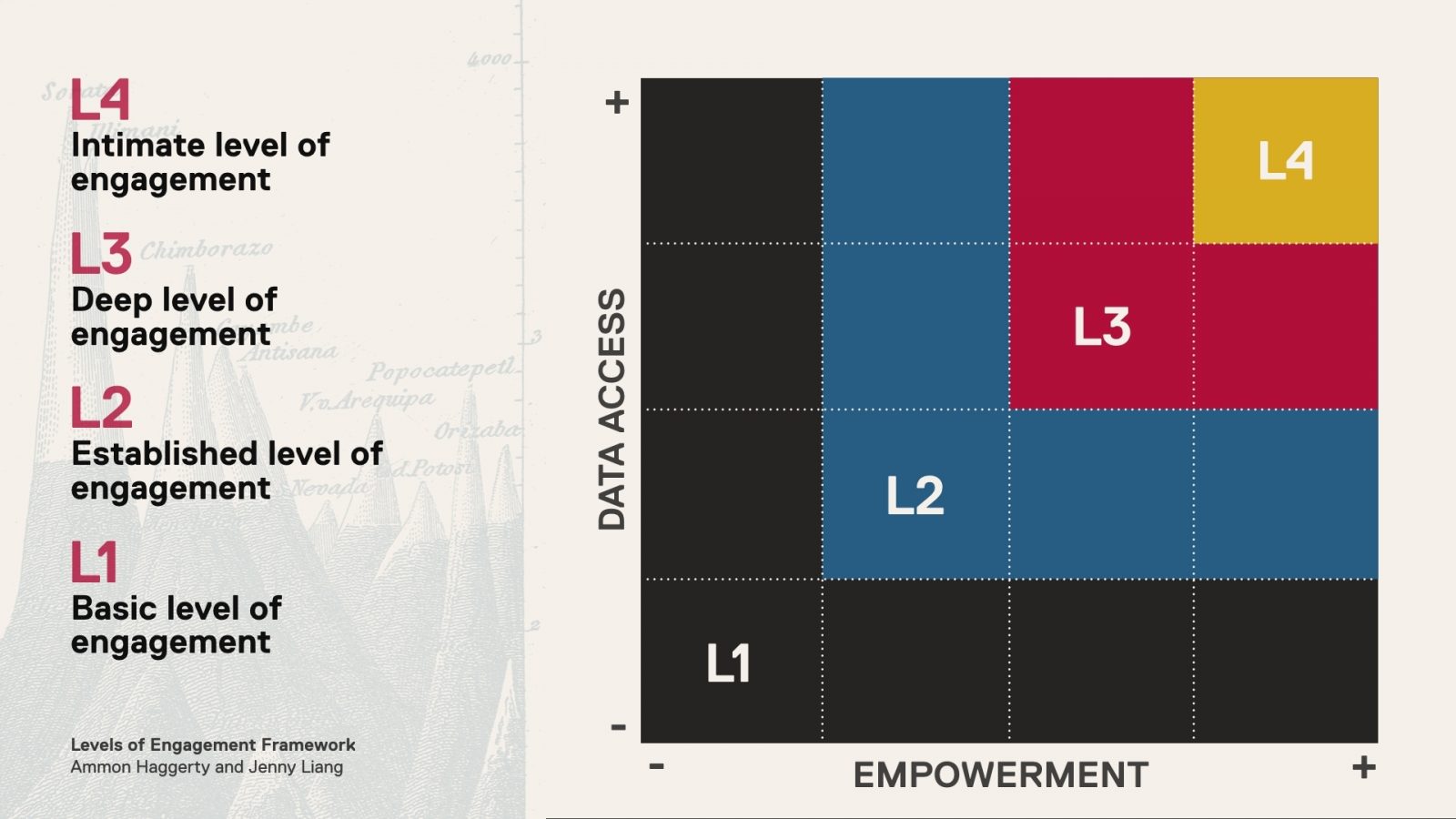

Several critical challenges must be addressed to realize this vision of a proxy agent as guardian angel. The AI needed for these agents (personal and service) is likely within our grasp now. But I don’t believe the technology can be effective without an ethical foundation grounded in trust and user empowerment. We need an ethical framework that scales care as deeper access unlocks more sensitive and nuanced data. Users of these agents could be deeply vulnerable to manipulation and exploitation. Looking at the analogy of a trusted human delegate, perhaps a spouse or parent, we can clearly see some of the requirements related to trust, familiarity, ethical intent, and more. I discuss some of these ideas in my IxDA talk at Interaction19.

We’re already seeing the dangers of exploitation today with our free-for-all, ad-driven, data-harvesting approach to creating business value, and we’re still in a predominantly influence-driven modality. Delegating decision making puts a spotlight on ethics, and for many organizations, trust surrounding data use is already severely damaged. In 2020, I see Apple vying for the tech privacy throne, and I still see Microsoft as well positioned to be a trusted arbiter of sensitive data. For all the companies that built their business on monetizing customer data, the path to rebuilding customer trust is steep.

In the end, my vision of ambient bionics is more about ambience, in the quiet, minimalist, and surrounding sense, than about bionics. I see the true superpower as the ability to unplug without tuning out. As a parent, I also see this idea as an alternative to the uber-connected, screen-addicted, always-on lifestyle of Gen Z. I worry technology may ultimately have a net-negative effect on humanity, but I feel my role while I’m on this earth is to help guide it in a positive direction.

Posted Nov 11, 2020