The Duality of AI

The notion of a gift that is both a blessing and a curse is well-trodden parable fodder. I’ve been reflecting on this duality while looking back on the past year of building AI-powered tools. Talking AI with friends and colleagues often leads to polarized views: solution accelerator vs doombringer, superpower enabler vs job killer, etc. Some of my thoughts about these two sides of AI, and my current stance, follow...

Creativity replacement vs creativity tool

BTW, a GPT (Generative Pre-trained Transformer) did not write this. I believe no one really wants to read articles written by AI, just as no one wants to listen to music created by AI, or watch movies or TV shows generated by AI. Beyond the novelty, as soon as there’s no person to connect with and relate to, it’s dead. AI is a mirror of ourselves, so the life we see in it is the remnant of the human spirit.

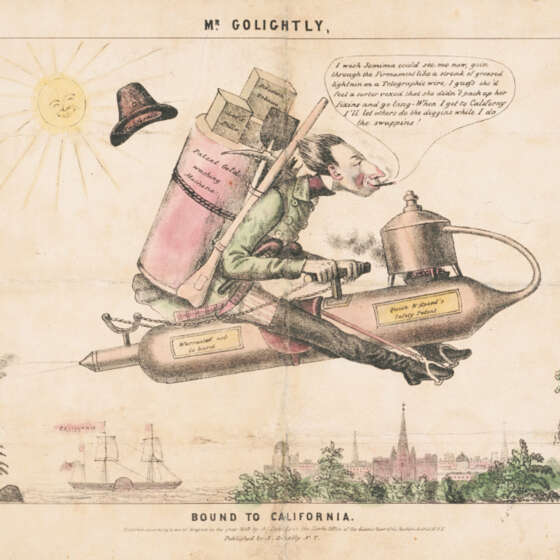

Content creation is a core function of the creative class. Generative AI has threatened creative jobs since day one. It didn’t have to be this way. While OpenAI initially tried to promote AI as creative augmentation, the replacement narrative dominated. Fear drives the narrative. Our opportunity is synergy. Cycles repeat: technologies unlock step changes in ability (for better or worse).

Hype Cycle vs Hype Train

The former happens because of the latter. We’re social creatures and want to flock together. Hype Trains inflate egos and ideas. Hype often (not always) leads to disappointment, resentment, reassessment, improvement, resurrection, then back to hype—that’s the Hype Cycle. Hype Cycles are generational, because it takes that long to forget, although “overwhelm” is quickly replacing “forget.”

A.I. will probably most likely lead to the end of the world, but in the meantime, there’ll be great companies.

- Sam Altman, 2024

The Hype Train isn’t just about engagement, it’s about driving the narrative. What we believe is what we will manifest. We can manifest a tool for the people, or we can manifest a windfall for the few. Hype Trains are the fuel for “get rich quick.” Hype Trains have learned a lot from social media and American politics. Without falling into despair, I’m on the side of social benefit.

Linear vs Exponential?

The past year has had no shortage of armchair AI quarterbacks predicting when AGI (Artificial General Intelligence) would arrive and what would happen if/when it does. It doesn’t help that the experts can’t agree. My old boss, Blaise Agüera y Arcas, pronounced last year that AGI is already here, embracing a very pragmatic definition of “general intelligence.” Then there’s Jaron Lanier and others, who think sentient machines are a silly fantasy. Like many, I was sucked in by the drama of OpenAI’s “imminent AGI emergence,” but I then realized it was really just a PAS (Problem, Agitate, Solution), a dark persuasion marketing tactic.

It turns out the belief in AGI is based on an expectation of exponential learning. The reality is that the pyramid needs exponential data. Two opposing exponential systems sort of cancel each other out. I say “sort of” because machines can generate their own training data, to the detriment of their exponential potential.

Lately I’ve been leaning more towards Jaron’s view. It’s not that Large Language Models and Generative AI aren’t amazing; they truly are. But as the fog clears, the picture that’s emerging is really just a reflection of ourselves, nothing transcendent. The idea that sentience could emerge from a neuromorphic architecture raises more questions about what constitutes human consciousness than about the ability of machines to awaken. It’s not AGI that we should worry about, it’s people.

Agentic Agents vs Personal Agents

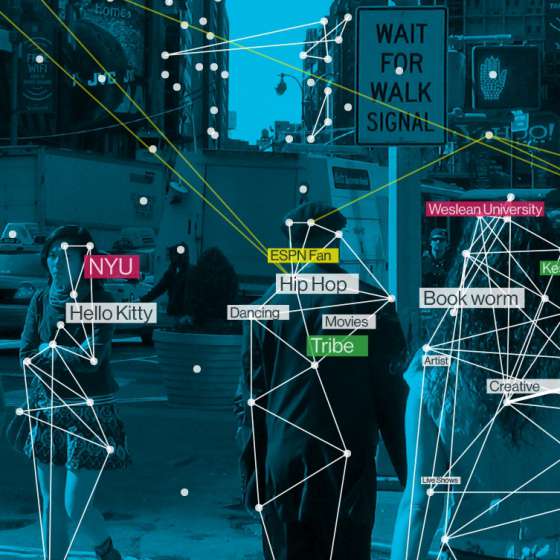

Agents: the next Hype Train building up steam. There are two symbiotic parts: one needs data, and the other needs trust. It’s a little personal conflict within the larger AI story. Agentic Agents, like Rabbit’s LAMs (Large Action Models) and Cognition’s “Devin”, are service agents with agency that have learned to “hack” human UIs. Personal Agents are a lot like the people who represent the famous and important: they negotiate on your behalf. This has yet to be unlocked, in large part due to the "trust problem," but I'm starting to see some interesting solutions. Both sides unlock the world of “digital twins.” And as you might imagine, all sorts of funny (not funny) stuff is bound to happen.

As with augmentation, my bias here is human empowerment. YES to delegating a support call (they're bots now anyway). NO to letting your digital twin have all the fun.

Is it worth it?

Let me work it. Let us work it. Let it not work us. -Missy

I'm often asked if AI is actually delivering value to anyone other than the AI companies. When sharing success examples, I exclude the ones that create value by replacing humans. I’d say true value is when individuals or teams increase their efficiency AND satisfaction in what they do. My next post will share details of true value creation; this one is about duality.

I’ve been lucky to work on a couple of amazing projects in the past year that have shown true value: a significant bump in efficiency and satisfaction. I also found another benefit, reach: unlocking capabilities that didn’t exist before. My favorite example is access to a vast, dynamic, knowledge graph-like data source, which in itself is a remarkable tool. This is not so much about generating words as it is about building knowledge systems and finding patterns in our collective consciousness. More on that later, but my music discovery prototype scratches the surface.

I think OpenAI initially understood that AI belongs to everyone, as a common good. What happens when you extract value from the many, then turn around and let the few sell it? Eventually, there are only two sides.

Jaron's video below also looks at the duality of AI. The “Inversion of AI” is all about data sovereignty and empowerment. He also has a couple of stories about finding true value in AI.

Posted

Apr 9, 2024