In the world of AI and machine learning, we face a fascinating design challenge: systems that need to make mistakes in order to learn. As a father of two daughters, I recognize this approach, whether it's clicking all the buttons on the remote or throwing it. Just as with children, if you prevent machine learning systems from making mistakes, you hold back their potential. Engaging playfully with learning systems aligns expectations, gives the technology more latitude to explore, and accelerates training.

Serendipity Watch

I was working with an engineer from Sony named Masahiro Shimohori. We were discussing the possibility that AI could orchestrate a serendipitous moment between two people by invisibly nudging both of them towards a delightful encounter. He said to me, “The opportunity for serendipity is a half-step ahead of the present.” While I still don’t know exactly what he meant, the words inspired a number of projects exploring playfulness and the subconscious.

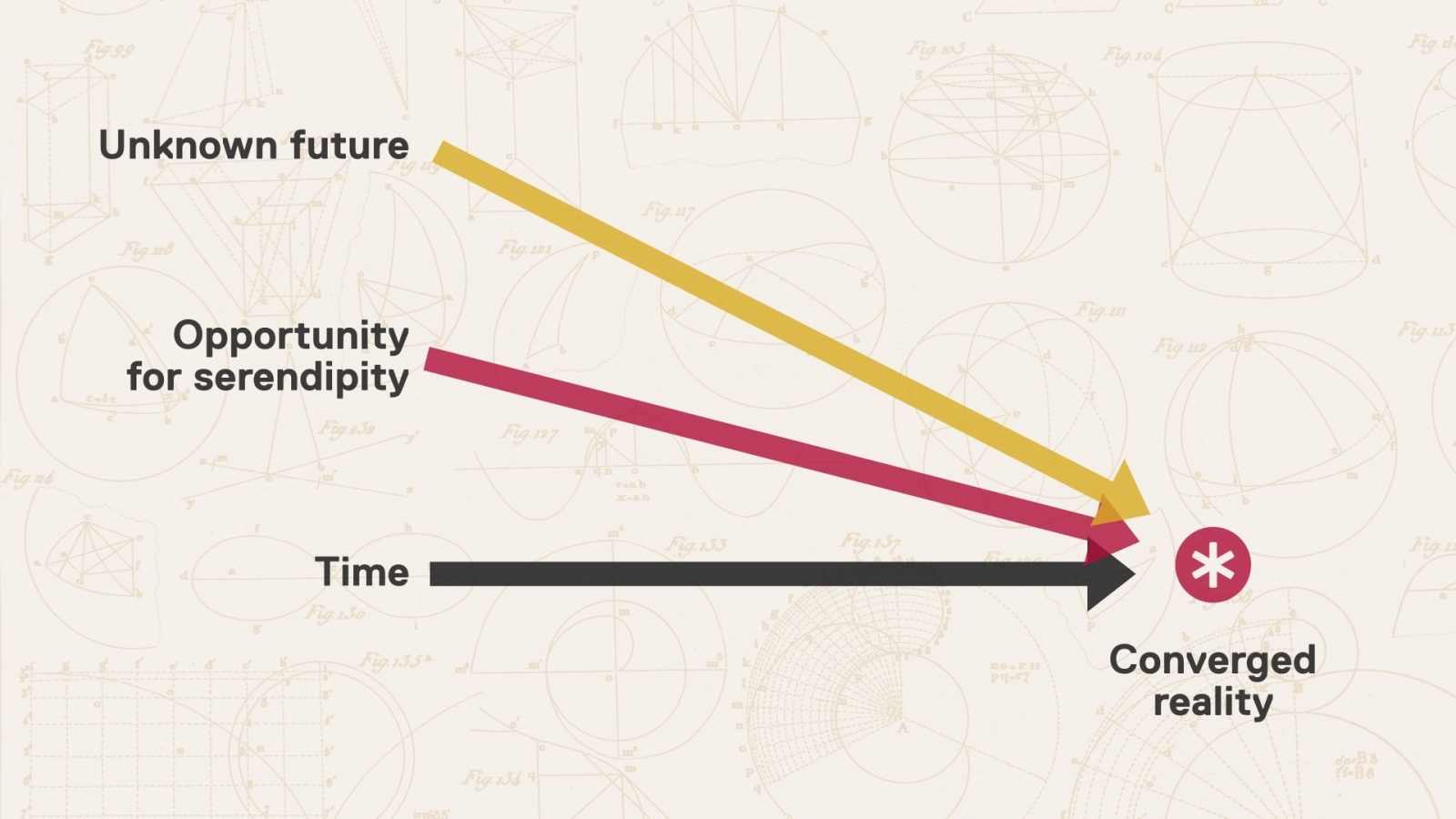

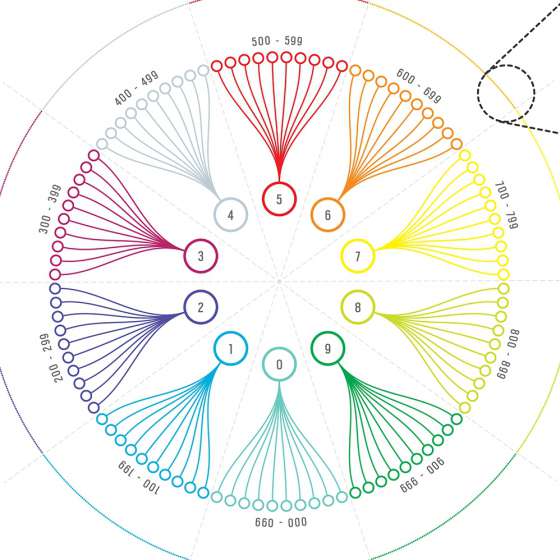

This is what I sketched when I heard the quote. I feel the idea gave me permission to experiment more freely with elements of time, space and probabilistic logic.

The image below illustrates how a machine learning (AI) model is trained. It begins with an initial period of learning, the "explore" phase. If all goes well, we reach an inflection point where we can shift focus to an "exploit" phase. Ideally, the value gained during exploitation outweighs the cost of learning, but the effectiveness of the exploitation phase is highly dependent on the success of the exploration phase. This concept uses both playfulness and user empowerment to improve machine learning performance by testing, and learning from, low-probability hypotheses. This helps overcome the "cold start problem" and accelerates model training and validation.
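To make the explore/exploit idea concrete, here is a minimal sketch of an explore-then-commit strategy, a standard multi-armed bandit technique swapped in for illustration rather than the method behind this concept. The options and their success rates (`true_rates`) are hypothetical. The learner tries every option during a fixed exploration budget, including the unlikely ones, then commits to the best observed option for the remaining rounds.

```python
import random


def explore_then_commit(true_rates, explore_rounds, total_rounds, seed=0):
    """Explore-then-commit on options that succeed with a hidden probability.

    true_rates: hypothetical success probability of each option (unknown to the learner).
    Returns (chosen_option, estimates_after_exploration, total_reward).
    """
    rng = random.Random(seed)
    n = len(true_rates)
    pulls = [0] * n
    wins = [0] * n
    total_reward = 0

    def pull(option):
        # Simulate trying an option: reward 1 with its hidden probability, else 0.
        reward = 1 if rng.random() < true_rates[option] else 0
        pulls[option] += 1
        wins[option] += reward
        return reward

    # Explore phase: cycle through every option, including low-probability ones.
    for t in range(explore_rounds):
        total_reward += pull(t % n)

    # Inflection point: commit to the option with the best observed success rate.
    estimates = [wins[i] / pulls[i] if pulls[i] else 0.0 for i in range(n)]
    best = max(range(n), key=lambda i: estimates[i])

    # Exploit phase: keep choosing the committed option for the remaining rounds.
    for _ in range(total_rounds - explore_rounds):
        total_reward += pull(best)

    return best, estimates, total_reward


best, estimates, reward = explore_then_commit([0.1, 0.2, 0.9], 300, 1000)
print(best, estimates, reward)
```

Here 300 exploration rounds sample each of the three options 100 times before committing. A shorter exploration budget raises the risk of committing to the wrong option, which mirrors the point that exploitation depends on how well exploration went.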

The “Serendipity Watch” was created as a proof of concept to illustrate the power of playfulness in model training. I chose the watch form factor because I like how the bezel provides a compelling interface for controlling time, but other form factors could also work, such as AR glasses or simply a phone.

The video begins with a prediction of the present moment: the watch observes the current context and factors in historical actions. When looking into the future, the watch shows possible future scenarios based on the most probable actions. Each probability, presented as a percentage, can be manually overridden, allowing the user to directly train the AI model.
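One way the manual override could work, sketched under assumptions: the watch holds a probability for each predicted scenario, the user sets one of them, and the others are rescaled so the total still sums to 1. The override is also kept as a labeled example a model could later learn from. The scenario names, `override_probability`, and the feedback record format are all hypothetical.

```python
def override_probability(predictions, scenario, new_prob, feedback_log):
    """Apply a user override to one scenario and log it as a training example.

    predictions: hypothetical dict mapping scenario name -> model probability (sums to 1).
    feedback_log: list collecting (scenario, model_prob, user_prob) tuples.
    """
    if scenario not in predictions:
        raise KeyError(scenario)
    if not 0.0 <= new_prob <= 1.0:
        raise ValueError("probability must be between 0 and 1")

    # Record the correction so a model could later be retrained on it.
    feedback_log.append((scenario, predictions[scenario], new_prob))

    others_total = sum(p for s, p in predictions.items() if s != scenario)
    remaining = 1.0 - new_prob
    updated = {}
    for s, p in predictions.items():
        if s == scenario:
            updated[s] = new_prob
        elif others_total > 0:
            # Scale the other scenarios proportionally into the remaining mass.
            updated[s] = p / others_total * remaining
        else:
            # All other scenarios were at 0, so split the remaining mass evenly.
            updated[s] = remaining / (len(predictions) - 1)
    return updated


log = []
now = {"office": 0.6, "cafe": 0.3, "park": 0.1}
print(override_probability(now, "park", 0.5, log))
print(log)
```

Raising "park" from 0.1 to 0.5 rescales "office" to about 0.33 and "cafe" to about 0.17, and the log keeps the model's original guess next to the user's correction.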

Things get interesting when you invite others into your experience and the watch finds possible intersections. In this case, a friend has a low probability of being at a local park at the same time as me. After I increase the likelihood that I'll be there, the friend receives an alert. In response, he also increases his likelihood, creating a facilitated opportunity for serendipity.
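The park example can be sketched as a search over both people's predicted schedules. This assumes each person's plans are independent, so the chance of meeting in a given slot is the product of the two probabilities; the schedule format, the `(place, hour)` slot keys, and the 0.1 alert threshold are hypothetical.

```python
def find_intersections(mine, theirs, threshold=0.1):
    """Return shared (place, hour) slots whose joint probability meets the threshold.

    mine, theirs: hypothetical dicts mapping (place, hour) -> probability of being there.
    Assumes independence, so P(both there) = P(me) * P(friend).
    """
    shared = mine.keys() & theirs.keys()
    hits = [(slot, mine[slot] * theirs[slot]) for slot in shared]
    # Keep only intersections likely enough to be worth an alert.
    hits = [(slot, p) for slot, p in hits if p >= threshold]
    # Most likely encounters first.
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


friend = {("park", 17): 0.4, ("cafe", 18): 0.7}

# Before the override: 0.2 * 0.4 = 0.08, below the threshold, so no alert.
print(find_intersections({("park", 17): 0.2, ("gym", 18): 0.5}, friend))

# After raising my park probability to 0.9: about 0.36, so the friend is alerted.
print(find_intersections({("park", 17): 0.9, ("gym", 18): 0.5}, friend))
```

If the friend then raises his own park probability, the joint value climbs further, which is the feedback loop the scenario describes.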

The further into the future you go, the less probable and less predictable the predictions become. In this case, I might be inspired to act on a highly unlikely speculation, possibly leading to a curated trip to a faraway destination. Maybe it would even inspire a friend to join me.
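One simple way to see why confidence drops with distance: if a long-range prediction is a chain of steps that must all happen, and the steps are assumed independent, its probability is the running product of the step probabilities, which can only shrink. The per-step values below are hypothetical, and this is not a description of how the watch itself would forecast.

```python
from itertools import accumulate
from operator import mul


def cumulative_confidence(step_probs):
    """Probability that every predicted step up to each point comes true.

    Assumes the steps are independent, so each horizon's probability is the
    product of all step probabilities before it.
    """
    return list(accumulate(step_probs, mul))


# Hypothetical per-step probabilities for predictions further and further out.
steps = [0.9, 0.8, 0.7, 0.5]
print(cumulative_confidence(steps))
```

With these values the chain falls from 0.9 for the first step to roughly 0.25 by the fourth, which is the territory of "highly unlikely speculation."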

While a working prototype was never built, some ideas from this project were used in my Sixth Sense project. This project was also featured in my IxDA Interaction 19 and Interaction 19 // SF Redux talks.