Keep AI Weird

Kyle Turman, creative technologist and staff designer at Anthropic, shared a sentiment that resonated deeply. He said (paraphrasing), “AI is actually really weird, and I don’t think people appreciate that enough.” This sparked my question to the panel: Are we at risk of sanitizing AI’s inherent strangeness?

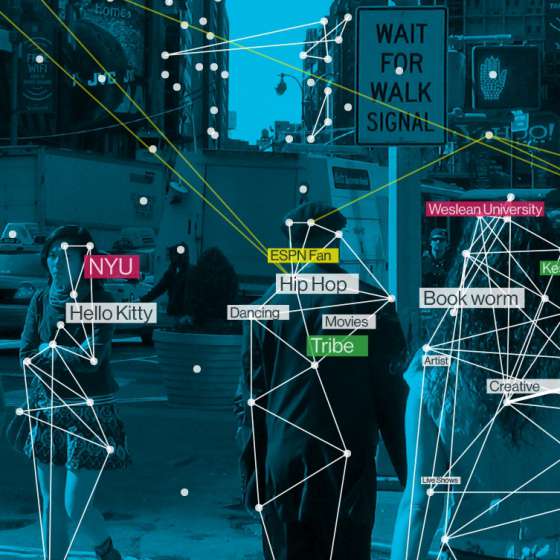

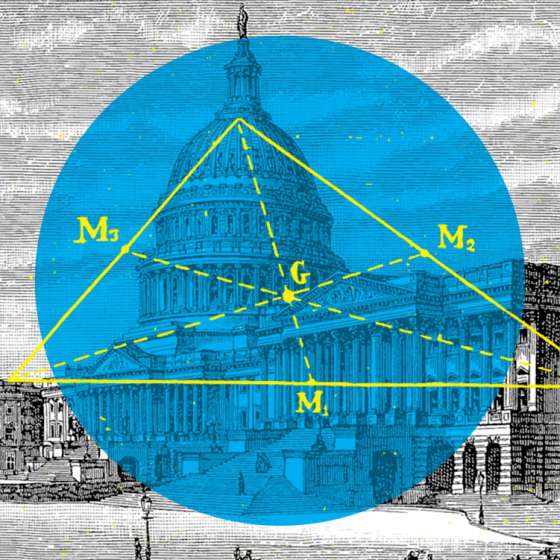

What followed was a fascinating discussion with a couple of friends who were also in attendance, Mickey McManus and Noteh Krauss. They both recognized the deeper question I was asking: the slippery slope of “cleansing” foundation AI models of everything deemed undesirable. LLMs are a reflection of humanity (albeit, at the moment, a primarily American and white-ish one), with all the weird and idiosyncratic quirks that make us human. There is a real danger that foundation models could be trained to maximize business value (of the American capitalist variety) and suppress radical and nonconforming ideas, a sort of revisionist optimization.

All this got me thinking about San Francisco, the city where I grew up and that my dad, grandfather, and great-grandfather called home. SF has been “weird” since the Gold Rush, attracting a melting pot of nonconformists, risk-takers, and radicals. Over generations, the city’s weirdness has ebbed and flowed, but it’s now deeply ingrained in the culture: the bohemians, the Beats, the hippies, the LGBTQ+ rights movement, tech counterculture, and now AI. These movements were born out of counterculture and unconventional thinking, and each one disrupted established social and business norms. Eventually each goes mainstream, and the cycle repeats. Growing up in San Francisco, I’ve witnessed firsthand how this cycle of weirdness and innovation has shaped the city. It’s a living testament to the power of unconventional thinking.

San Francisco is a mad city – inhabited for the most part by perfectly insane people.

- Rudyard Kipling (1889)

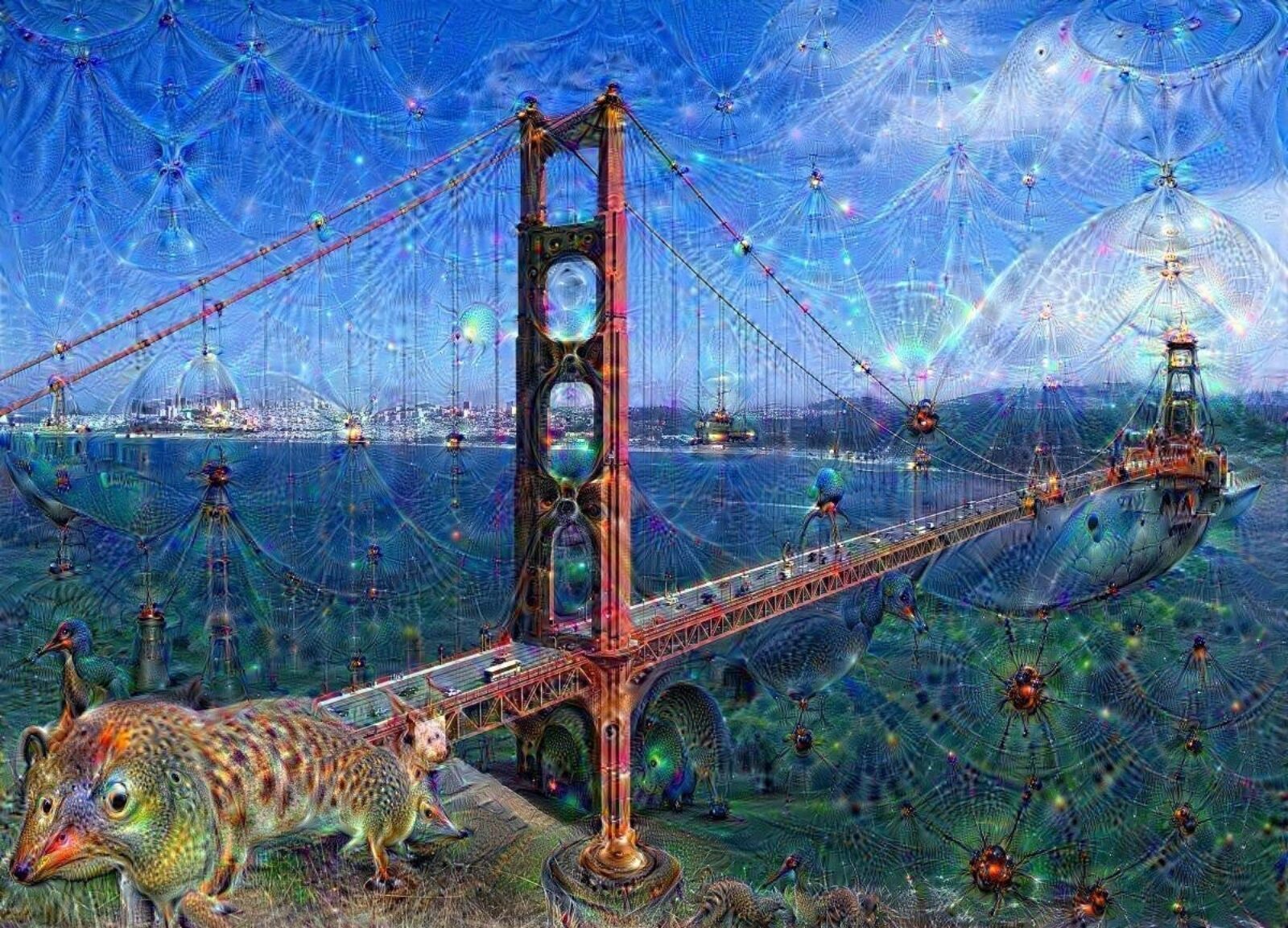

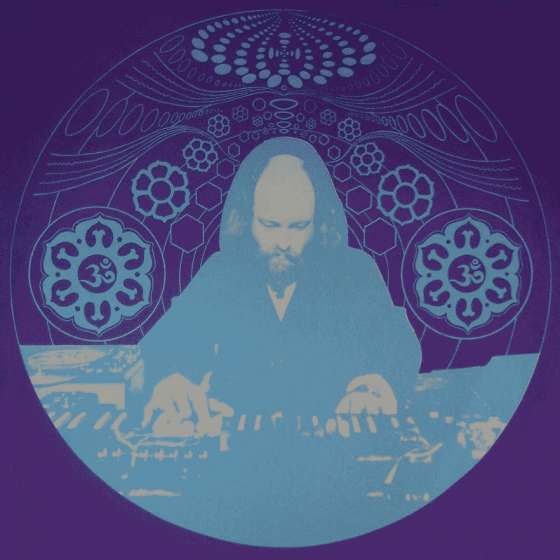

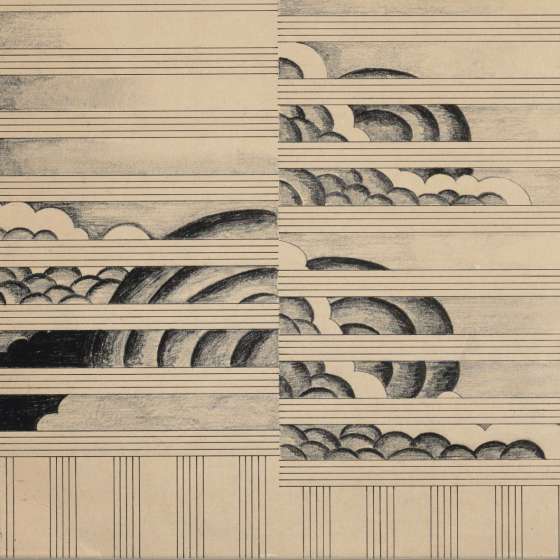

Like San Francisco, AI has a fairly long history of being weird. Early experiments such as AARON (1972), Harold Cohen’s program that encoded rules for artistic decision-making, created compositions resembling outsider art. Racter (1984) was an early text-generating AI that often produced dreamlike or surrealist output: “More than iron, more than lead, more than gold I need electricity. I need it more than I need lamb or pork or lettuce or cucumber. I need it for my dreams.” More recently, Google’s DeepDream (2015) used a convolutional neural network to find and amplify patterns learned from its training data, producing hallucination-like images and videos.

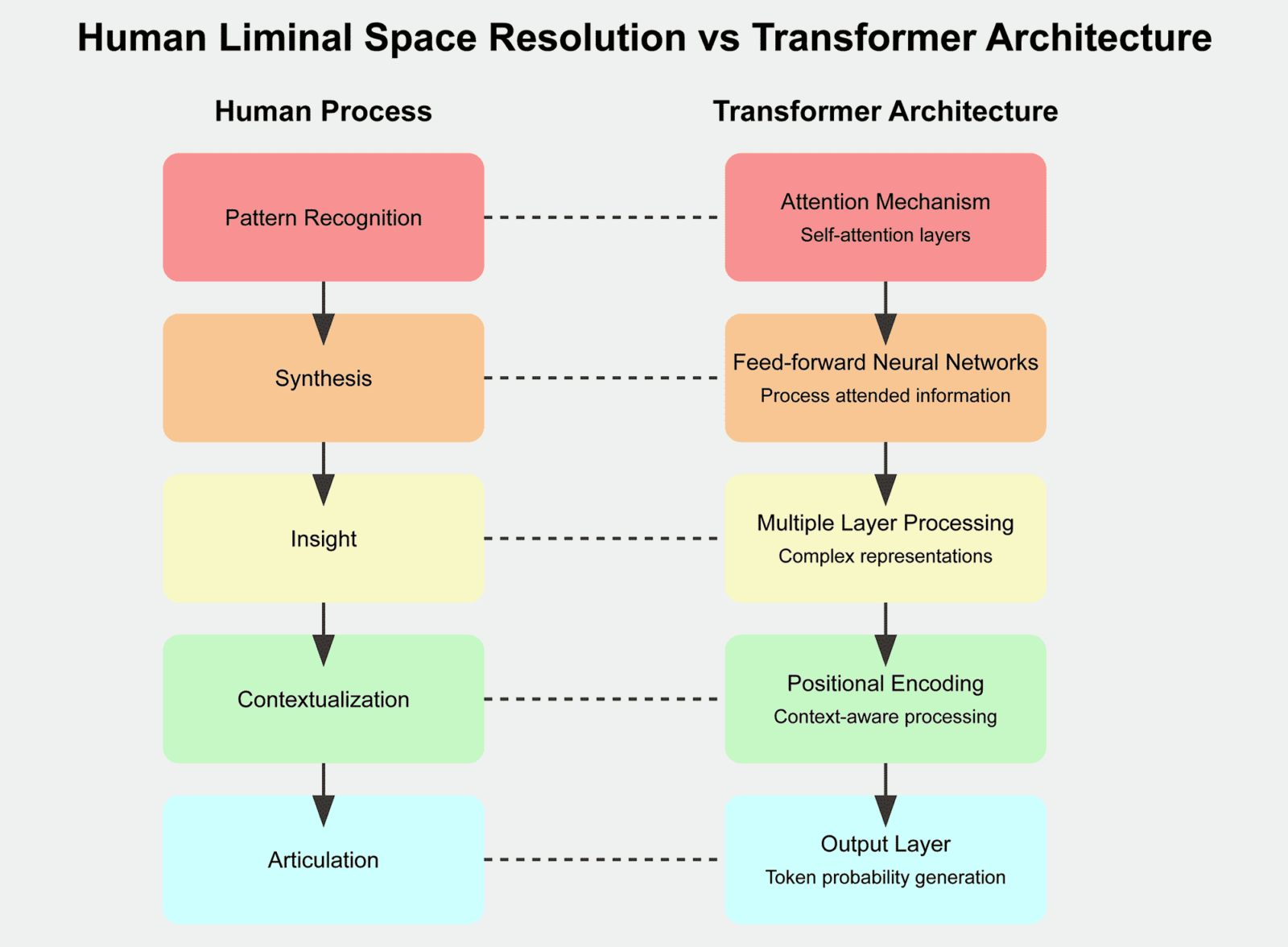

These “edge states” in AI’s evolution are, to me, the most interesting, and most human, expressions. Human creativity explores a similar edge state, often called “liminal space”: the threshold between reality and imagination. What’s really interesting is that the mental process of extracting meaning from liminal space is highly analogous to how the transformer architecture used in LLMs works. In the human brain, we look for patterns, synthesize new ideas and information, find unexpected connections, contextualize the findings, and then articulate the ideas in words we can express. In a transformer, the attention mechanism looks for patterns by weighing every word against every other word in the context, neural networks “synthesize” that information, and many stacked layers iterate and prioritize until they form probabilistic “insights.” Positional encoding, applied to the input before any of this, records where each word sits in the sequence so the model can place it in context. Finally, the model articulates its output one word at a time, as a best guess based on what it learned in training. Sorry if that was dense; this part is for nerd friends to either validate or challenge.
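For the nerd friends, here is a minimal sketch of the “looks for patterns” step: scaled dot-product attention, written in plain Python. It is a simplified illustration under stated assumptions, not how any production LLM is implemented. Real models add learned projection matrices, multiple attention heads, feed-forward layers, and positional encoding, all omitted here. The function names and the toy token vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention (simplified sketch).

    Each query scores every key (how well do they "match"?), the scores
    become weights via softmax, and the output is the weighted blend of
    the values.
    """
    d = len(keys[0])  # vector dimension, used to scale the scores
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs

# Hypothetical toy example: three "tokens" as 2-dimensional vectors,
# each attending to all three (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(tokens, tokens, tokens)
for row in out:
    print([round(x, 3) for x in row])
```

Each output row is a context-aware blend of every token, weighted by how strongly the tokens “match.” That blending, rather than a lookup of stored facts, is part of why a model’s answers are probabilistic syntheses.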

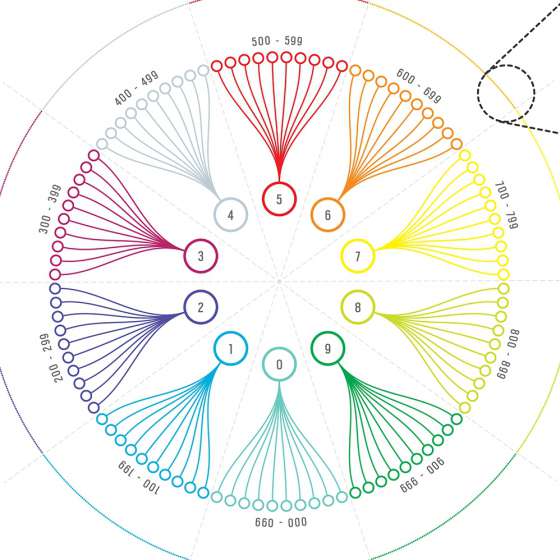

This is all to say that I feel there’s something really interesting in AI’s liminal space, better known as “AI hallucination,” which most people consider bad. Very bad! I agree that when you ask an AI an important question and it gives a made-up answer, that’s not a good thing. But the model isn’t deliberately making things up; it’s synthesizing a highly probable answer from an ambiguous cloud of understanding (the question, its data, meaning, and so on). I say, let’s explore and celebrate this analog of human creativity. What if, instead of fearing AI’s “hallucinations,” we embraced them as digital dreams? This idea inspired me to create SNDOUT, which explores the liminal space of music knowledge embedded in LLMs.

So I suddenly feel a little more connected to my counterculture friends protesting big tech’s takeover of San Francisco. While I've been vocal about AI's ethical challenges for creators (1) (2), I'm deeply inspired by the creative potential of these new tools. I also fear some of the most interesting parts could begin to disappear. As I consider donning a “Keep AI Weird” t-shirt in solidarity with my counterculture friends, I wonder: Will the next rebellion be human, AI, or a collaboration of both? Whatever form it takes, I hope it keeps us all a little weird.

Posted Jun 25, 2024